Tag: time series

Announcing the Beta Prerelease of the New Electronic World Treaty Index

What is the World Treaty Index?

The World Treaty Index (WTI), originally compiled by Peter Rohn in the 1960s and 1970s and subsequently maintained and updated at the University of Washington, is a comprehensive list of all known treaties formed during the twentieth century. This includes not only treaties formally registered with the United Nations (UNTS) but also a significant number of unregistered agreements.

What information does the World Treaty Index provide?

The WTI provides information on the parties to the agreement, the general topic of the agreement (e.g. trade agreement, tax agreement, arms control agreement), the signing date and the date in force, and the volume and page containing the text of the agreement. Though the WTI does not provide the full text of each agreement, it is an excellent resource for identifying when a state (or states) formed international agreements of a particular type. With a list of relevant agreements (including their volume and page number), an end user interested in obtaining the full text can simply collect them using the primary source material (e.g. UNTS, LTS).

Who is currently administrating the World Treaty Index?

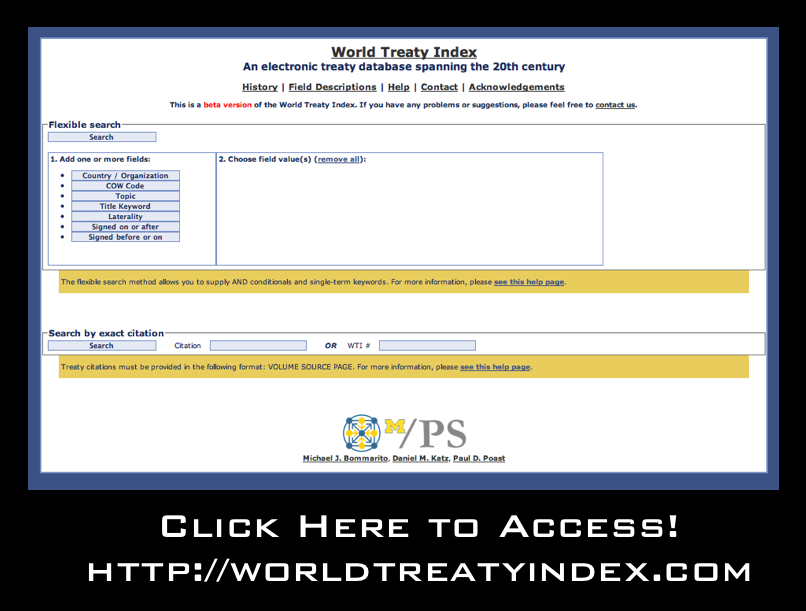

The Electronic WTI is now housed at the University of Michigan and administrated by Michael Bommarito, Daniel Martin Katz and Paul Poast. We are highlighting the newly constructed Beta Prerelease of the WTI website in an effort to obtain feedback prior to the official release. The currently available product provides access to information on more than 58,000 bilateral and multilateral treaties formed between 1900 and 1997. When full coverage for the 20th century is complete, the database should feature in excess of 70,000 agreements.

What are some examples of searches I can conduct on the World Treaty Index website?

While the WTI should support all browsers, we suggest using Firefox. Below are three sample searches.

Search #1: Suppose a user would like to collect all agreements involving Brazil. Use the “flexible search” and follow three easy steps. (A) Select the country/organization field (B) within the country/organization field set the field value = Brazil (C) click the search button.

Search #2: Suppose a user would like to collect all agreements between Mexico and Spain. Use the “flexible search” and follow five easy steps. (A) Select the country/organization field (B) within the country/organization field set the field value = Mexico (C) Select a second country/organization field (D) within this new country/organization field set the field value = Spain (E) now click the search button.

Search #3: Suppose a user would like to know how many extradition agreements France signed between 1950 and 1962. This is similar to the examples above but involves the topic, signed on or after and signed before or on fields. After the user chooses the proper search fields and selects the search information, the WTI will produce on the screen a list of the desired agreements and provide the option of downloading the list as a CSV file.
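Once the list has been downloaded, the same count can be reproduced offline. The snippet below is only a sketch: the column names (`party`, `topic`, `signed`), the ISO date format, and the sample rows are our own assumptions for illustration and may not match the actual CSV export.

```python
import csv
import io
from datetime import date

# Hypothetical export: the real column names and date format may differ.
SAMPLE_CSV = """party,topic,signed
France,Extradition,1951-03-02
France,Trade,1955-06-10
France,Extradition,1963-01-15
Mexico,Extradition,1958-09-30
"""

def count_agreements(csv_text, party, topic, start_year, end_year):
    """Count rows matching a party and topic signed within [start_year, end_year]."""
    matches = 0
    for row in csv.DictReader(io.StringIO(csv_text)):
        signed = date.fromisoformat(row["signed"])
        if (row["party"] == party and row["topic"] == topic
                and start_year <= signed.year <= end_year):
            matches += 1
    return matches

france_extradition = count_agreements(SAMPLE_CSV, "France", "Extradition", 1950, 1962)
```

With a real export, the sample text would be replaced by the contents of the downloaded file, and the column names adjusted to whatever the site actually emits.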

When will it feature full coverage for the entire 20th Century?

By the end of 2010, we will add (1) all bilateral treaties formed between 1900 and 1944 [Now Mostly Done] (2) all bilateral treaties formed between 1998 and 1999, and (3) all multilateral agreements and a list of all parties to each multilateral agreement. If you know of an agreement that is not ultimately featured on the site please contact us and we will be happy to add it to the list.

How can I learn more about the World Treaty Index?

For a general history of the World Treaty Index, visit the “History Page” on the worldtreatyindex.com website. For a more detailed treatment please see: Glenda Pearson, Rohn’s World Treaty Index: Its Past and Future, 29 International Journal of Legal Information 543 (2001).

What additional extensions of the Electronic World Treaty Index are planned?

As noted above, our initial goal is to provide complete coverage of all known agreements in the 20th Century. Planned extensions include bringing the World Treaty Index forward so as to provide coverage up to 2010. In addition, we plan to collect information regarding treaty terminations. Finally, we would like to enhance the granularity of our topic codes and allow for agreements with multiple dimensions to feature multiple topic codes.

Feedback?

At this point, we have only offered a beta pre-release of the site. Thus, we would really appreciate your feedback. Please email us at worldtreatyindex@gmail.com if you have any thoughts about how to improve the site.

Cash for Clunkers – Visualization and Analysis

Cash for Clunkers: A Dynamic Map of the Car Allowance Rebate System (CARS)

Some Background on the Car Allowance Rebate System (CARS)

From the official July 27, 2009 press release – “The National Highway Traffic Safety Administration (NHTSA) also released the final eligibility requirements to participate in the program. Under the CARS program, consumers receive a $3,500 or $4,500 discount from a car dealer when they trade in their old vehicle and purchase or lease a new, qualifying vehicle. In order to be eligible for the program, the trade-in passenger vehicle must: be manufactured less than 25 years before the date it is traded in; have a combined city/highway fuel economy of 18 miles per gallon or less; be in drivable condition; and be continuously insured and registered to the same owner for the full year before the trade-in. Transactions must be made between now [July 27, 2009] and November 1, 2009 or until the money runs out.”

On August 6, 2009, Congress extended the program, adding $2 billion to the program’s initial allocation. For those interested in background, feel free to read the CNN report on the program extension.

On August 13, 2009, the Secretary offered this press release noting “[T]he Department of Transportation today clarified that consumers who want to purchase new vehicles not yet on dealer lots can still be eligible for the CARS program. Dealers and consumers who have reached a valid purchase and sale agreement on a vehicle already in the production pipeline will be able to work with the manufacturer to receive the documentation needed to qualify for the program.”

On August 20, 2009, the Secretary announced the program would end on August 24, 2009 at 8 p.m. EDT. While this remained the deadline for sales, dealers were provided a small extension to file paperwork (noon on August 25, 2009). For those interested, all other press releases are available here.

The Cars.gov DataSet

The full data set is available for download here. From the Cars.gov website “these reports contain the transaction level information entered by participating dealers for the 677,081 CARS transactions that were paid or approved for payment as of Friday, October 16, 2009 at 3:00PM EDT for a total of $2,850,162,500. Please note that confidential financial or commercial information and consumer information protected under the DOT privacy policy has been redacted.” The official cars.gov website offers additional caveats in its note to analysts. One important thing to note: there is a statutory exemption which allowed transactions to occur pursuant to an amended rule after the August 24, 2009 termination date. Here is the relevant language of the amended rule:

“To qualify for the exception process, a dealer must have been prevented from submitting an application for reimbursement due to a hardship caused by the agency. Specifically, a dealer may request an exception if the dealer was locked out of the CARS system, contacted NHTSA for a password reset prior to the announced deadline, but did not receive a password reset. A dealer also may request an exception if its timely transaction was rejected by the CARS system due to a duplicate State identification number, trade-in vehicle VIN, or new vehicle VIN that was never used for a submitted CARS transaction, if the dealer contacted NHTSA prior to the announced deadline to resolve the issue but did not receive a resolution. Finally, a dealer may seek an exception if it was prevented from submitting a transaction by the announced deadline due to another hardship attributable to NHTSA’s action or inaction, upon submission of proof and justification satisfactory to the Administrator.”

For those who have downloaded the full set, the above passage explains why there exist transaction data which fall outside of the general CARS program window.

Dynamic Visualization of the Spatial Distribution of Sales

Each time step of the animation represents a day for which there exist data in the official CARS dataset. While the program officially started on July 27, 2009, the dataset contains both transactions undertaken during the pilot program and transactions undertaken pursuant to the exemption process described above. Thus, the movie begins with the first unit of observation on July 1, 2009 and terminates with the final transaction on October 24, 2009. Similar to a flip book, the movie is generated by threading together each daily time slice.

The Size and Color of Each Circle

Each circle represents a zip code in which one or more participating dealerships are located. The radius of a given circle is a function of the number of CARS related sales in a given zip code as of the date in question. In each day, the circle is colored if there is at least one sale in the current period while the circle is resized based upon the number of sales in the given period.
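To make the sizing and coloring rule concrete, here is a minimal sketch of how one frame could be computed. The square-root scaling (so that circle area grows with sales), the `scale` parameter, and the sample records are our assumptions for illustration; the post does not specify the exact function used.

```python
import math
from collections import Counter
from datetime import date

# Made-up (sale_date, dealer_zip) pairs standing in for the real transaction records.
SALES = [
    (date(2009, 7, 27), "48104"),
    (date(2009, 7, 27), "48104"),
    (date(2009, 7, 28), "60601"),
    (date(2009, 7, 29), "48104"),
    (date(2009, 7, 29), "48104"),
]

def circles_for_day(sales, day, scale=1.0):
    """Return {zip: (radius, is_colored)} for one frame of the animation."""
    # Radius depends on all sales in the zip code up to and including `day`.
    cumulative = Counter(z for d, z in sales if d <= day)
    # A circle is colored only if the zip code had at least one sale on `day`.
    active_today = {z for d, z in sales if d == day}
    return {
        z: (scale * math.sqrt(n), z in active_today)
        for z, n in cumulative.items()
    }

frame = circles_for_day(SALES, date(2009, 7, 29))
```

In this toy frame, zip code 48104 has four cumulative sales and is colored, while 60601 keeps its size from the previous day but is not colored.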

In the later days of the data window, particularly those after the official August 25 termination of the program, the daily sales are fairly negligible. However, as outlined in the dataset description above, each participating institution that qualified for the exemption was allowed to submit transactions beyond the official program termination date. Notice that the cumulative percentage of sales reaches nearly all total sales by August 25th. Virtually all sales occur during the official July 27, 2009 – August 24, 2009 window. Thus, while these stragglers caused certain circles to remain illuminated, the size of the circles is essentially fixed after August 24, 2009.

Some Things to Notice in the Visualization

In the lower left corner of the video, you will notice two charts. The chart on the left tracks the contribution to total sales for the given day. The chart on the right represents the cumulative percentage of sales to date under the program. Not surprisingly, most of the transactions under the CARS program take place within the July 27, 2009 – August 24, 2009 time window.
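The two series behind these charts are simple to derive from transaction dates. The following is a sketch under the assumption that each transaction carries a single sale date; the dates shown are made up.

```python
from collections import Counter
from datetime import date
from itertools import accumulate

# Made-up transaction dates; the real dataset has one row per transaction.
SALE_DATES = [
    date(2009, 7, 27), date(2009, 7, 27),
    date(2009, 7, 28),
    date(2009, 8, 24),
]

def daily_and_cumulative_share(sale_dates):
    """Return [(day, share_of_total, cumulative_share)] sorted by day."""
    counts = Counter(sale_dates)
    total = sum(counts.values())
    days = sorted(counts)
    shares = [counts[d] / total for d in days]
    # accumulate() produces the running sum used for the right-hand chart.
    return list(zip(days, shares, accumulate(shares)))

series = daily_and_cumulative_share(SALE_DATES)
```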

Within this window, the daily sales feature a variety of interesting trends. During each Sunday of the program (i.e. August 2nd, August 9th, August 16th & August 23rd) sales were significantly diminished. Not surprisingly, the end of week and early weekend sales tend to be the strongest.
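A weekly pattern like this can be checked by averaging daily sales per weekday. The counts below are invented purely to show the mechanics; they are not figures from the dataset.

```python
from collections import defaultdict
from datetime import date

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]

# Made-up daily sales counts; real values would come from the Cars.gov data.
DAILY_SALES = {
    date(2009, 7, 31): 120,  # Friday
    date(2009, 8, 1): 110,   # Saturday
    date(2009, 8, 2): 30,    # Sunday
    date(2009, 8, 7): 130,   # Friday
    date(2009, 8, 9): 20,    # Sunday
}

def mean_sales_by_weekday(daily_sales):
    """Average the daily counts separately for each day of the week."""
    buckets = defaultdict(list)
    for day, n in daily_sales.items():
        buckets[WEEKDAYS[day.weekday()]].append(n)  # weekday(): Monday == 0
    return {name: sum(v) / len(v) for name, v in buckets.items()}

by_weekday = mean_sales_by_weekday(DAILY_SALES)
```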

In the very early days of the program, there were a variety of media reports (e.g. here, here, here) highlighting the quickly diminishing resources under the program. Obviously, it is difficult to determine the underlying demand for the program. However, given the extent of the acceleration, it appears these reports contributed to the rapid depletion of the initial $1 billion allocated under the program. A similar but less pronounced form of herding also accompanied the last days of the CARS program.

Dynamic Animation of the East Anglia Climate Research Unit Email Network


STATIC SNAPSHOT TO DYNAMIC ANIMATION

In our prior post analyzing the email database of the Climate Research Unit at the University of East Anglia, we aggregated all emails over the relevant 1997-2009 time period into a single static visualization. Specifically, to build the network, we processed every email in the leaked data. Each email contains a sender and at least one recipient on the To:, Cc:, or Bcc: line.

One obvious shortcoming associated with producing a static snapshot for a data set is that it often obscures the time evolving dynamics of interaction which produced the full graph. To generate a dynamic picture, it is necessary to collect time stamped network data. In the current case, this required acquisition of the date field for each of the emails. With this information, we used the same underlying data to generate a dynamic network animation for the 1997-2009 time window.

HOW TO INTERPRET THE MOVIE

Consistent with the approach offered in our prior visualization, each node represents an individual within the email dataset while each connection reflects the weighted relationship between those individuals. The movie posted above features the date in the upper left. As time ticks forward, you will notice that the relative social relationships between individuals are updated with each new batch of emails. In some periods, this updating has a significant impact upon the broader network topology and at other times it has little structural consequence.

In each period, both new connections as well as new communications across existing connections are colored teal while the existing and dormant relationships remain white. Among other things, this is useful because it identifies when a connection is established and which interactions are active at any given time period.
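The bookkeeping behind this coloring can be sketched in a few lines. This is an illustration rather than our production code: the records are made up, ties are treated as undirected, and each email adds one unit of weight per recipient, all of which are simplifying assumptions.

```python
from collections import Counter

# Made-up (period, sender, recipients) records; real ones would be parsed
# from each email's date, From:, To:, Cc: and Bcc: fields.
EMAILS = [
    (1997, "alice", ["bob"]),
    (1997, "alice", ["bob", "carol"]),
    (1998, "bob", ["carol"]),
    (1998, "alice", ["bob"]),
]

def frames(emails):
    """Yield (period, cumulative edge weights, edges active in that period)."""
    weights = Counter()
    for period in sorted({p for p, _, _ in emails}):
        active = set()
        for p, sender, recipients in emails:
            if p != period:
                continue
            for recipient in recipients:
                edge = frozenset((sender, recipient))  # undirected tie (assumption)
                weights[edge] += 1
                active.add(edge)
        # Copy the weights so each frame keeps its own snapshot.
        yield period, dict(weights), active

def edge_color(edge, active):
    """Teal for edges that carried email this period, white for dormant ones."""
    return "teal" if edge in active else "white"

timeline = list(frames(EMAILS))
```

In the second toy period, the alice-bob tie is strengthened and drawn teal, the new bob-carol tie is also teal, and the dormant alice-carol tie stays white.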

A SHORT VERSION AND A LONG VERSION

We have two separate versions of the movie. The version above is a shorter version where roughly 13 years is displayed in under 2 minutes. In the coming days, we will have a longer version of the movie which ticks one email at a time. In both versions, each frame is rendered using the Kamada-Kawai layout algorithm. Then, the frames are threaded together using linear interpolation.
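The interpolation step can be illustrated as follows. The sketch assumes the keyframe positions have already been computed by a layout algorithm and are available as `{node: (x, y)}` dictionaries; the function name and the toy coordinates are ours, and nodes missing from either keyframe are simply skipped.

```python
def interpolate_layouts(start, end, steps):
    """Return `steps` intermediate layouts between two {node: (x, y)} keyframes."""
    frames = []
    for i in range(1, steps + 1):
        t = i / (steps + 1)  # fraction of the way from start to end
        frames.append({
            node: (
                (1 - t) * start[node][0] + t * end[node][0],
                (1 - t) * start[node][1] + t * end[node][1],
            )
            for node in start
            if node in end  # only nodes present in both keyframes
        })
    return frames

# Toy keyframes; real positions would come from the layout algorithm.
key_a = {"alice": (0.0, 0.0), "bob": (1.0, 0.0)}
key_b = {"alice": (0.0, 2.0), "bob": (1.0, 4.0)}
tween = interpolate_layouts(key_a, key_b, steps=1)
```

With a single intermediate step, each node is placed exactly halfway between its two keyframe positions.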

SELECTION EFFECTS

Issues of selection confront many researchers. Namely, given the released emails are only a subset of the broader universe of emails authored over the relevant time window, it is important to remember that the data has been filtered and the impact of this filtration cannot be precisely determined. Notwithstanding this issue, our assumption is that every email from a sender to a recipient represents some level of relationship between them. Furthermore, we assume that more emails sent between two people generally indicates a stronger relationship between those individuals.

DIMENSIONALITY

In our academic scholarship, we have confronted questions of dimensionality in network data. Simply put, analyzing network data drawn from high dimensional space can be really thorny. In the current context, a given email box likely contains emails on lots of subjects and reflects lots of people not relevant to the specific issue in question. Again, while we do not specifically know the manner in which the filter was applied, it is certainly possible that the filter actually served to mitigate issues of dimensionality.

ACCESS THE DATA

For those interested in searching the emails, the NY Times directs the end user to http://www.eastangliaemails.com/

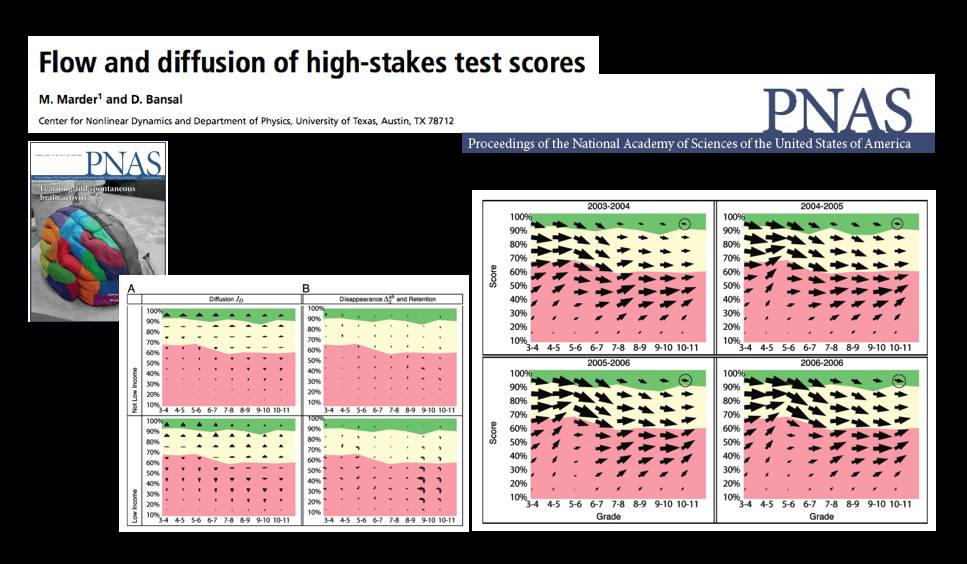

A Statistical Mechanics Take on No Child Left Behind — Flow and Diffusion of High-Stakes Test Scores [From PNAS]

The October 13th Edition of the Proceedings of the National Academy of Sciences features a very interesting article by Michael Marder and Dhruv Bansal from the University of Texas.

From the article … “Texas began testing almost every student in almost every public school in grades 3-11 in 2003 with the Texas Assessment of Knowledge and Skills (TAKS). Every other state in the United States administers similar tests and gathers similar data, either because of its own testing history, or because of the Elementary and Secondary Education Act of 2001 (No Child Left Behind, or NCLB). Texas mathematics scores for the years 2003 through 2007 comprise a data set involving more than 17 million examinations of over 4.6 million distinct students. Here we borrow techniques from statistical mechanics developed to describe particle flows with convection and diffusion and apply them to these mathematics scores. The methods we use to display data are motivated by the desire to let the numbers speak for themselves with minimal filtering by expectations or theories.

The most similar previous work describes schools using Markov models. “Demographic accounting” predicts changes in the distribution of a population over time using Markov models and has been used to try to predict student enrollment year to year, likely graduation times for students, and the production of and demand for teachers. We obtain a more detailed description of students based on large quantities of testing data that are just starting to become available. Working in a space of score and time we pursue approximations that lead from general Markov models to Fokker–Planck equations, and obtain the advantages in physical interpretation that follow from the ideas of convection and diffusion.”
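For readers unfamiliar with the term, a one-dimensional Fokker–Planck equation in its generic textbook form is given below. The notation is ours and is not necessarily the form used in the article: $p(x,t)$ is the density of students at score $x$ in year $t$, $v(x,t)$ is the drift (convection) term, and $D(x,t)$ is the diffusion term.

```latex
\frac{\partial p(x,t)}{\partial t}
  = -\frac{\partial}{\partial x}\bigl[v(x,t)\,p(x,t)\bigr]
    + \frac{\partial^{2}}{\partial x^{2}}\bigl[D(x,t)\,p(x,t)\bigr],
\qquad
v \approx \frac{\langle \Delta x \rangle}{\Delta t},
\quad
D \approx \frac{\langle (\Delta x)^{2} \rangle}{2\,\Delta t}
```

In the standard derivation, the two coefficients are the first two moments of the Markov transition probabilities over a time step $\Delta t$: the mean change in score gives the drift, and the mean squared change gives the diffusion.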

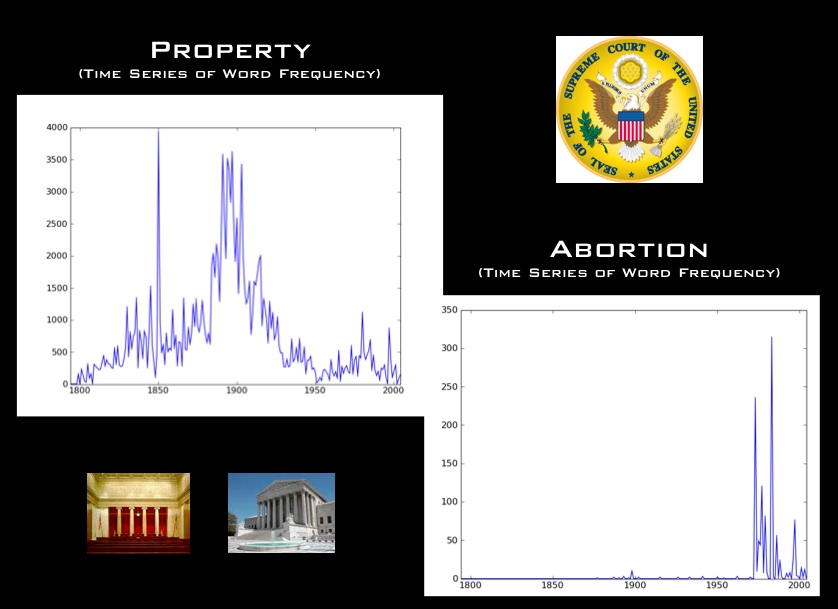

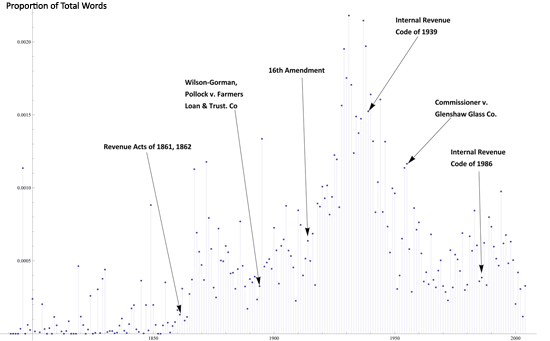

Tax Day! A First-Order History of the Supreme Court and Tax

Click to view the full image.

In honor of Tax Day, we’ve produced a simple time series representation of the Supreme Court and tax. The above plot shows how often the word “tax” occurs in the cases of the Supreme Court for each year, that is, what proportion of all words in every case in a given year are the word “tax.” The underlying data includes non-procedural cases from 1790 to 2004. The arrows highlight important legislation and cases for income tax as well.
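The measure itself is easy to reproduce. Below is a minimal sketch with invented sentences standing in for the case texts; the tokenization (lowercased alphabetic runs) and the exact-match rule (so "taxes" is not counted) are our assumptions, not a description of the pipeline behind the plot.

```python
import re
from collections import defaultdict

# Made-up (year, opinion text) pairs standing in for the real case corpus.
CASES = [
    (1895, "The tax on income is a direct tax."),
    (1895, "The contract was void."),
    (1913, "Congress may tax incomes."),
]

def word_share_by_year(cases, word="tax"):
    """Return {year: occurrences of `word` / total words across that year's cases}."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for year, text in cases:
        tokens = re.findall(r"[a-z]+", text.lower())
        totals[year] += len(tokens)
        hits[year] += sum(1 for tok in tokens if tok == word)
    return {year: hits[year] / totals[year] for year in sorted(totals) if totals[year]}

shares = word_share_by_year(CASES)
```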


Happy Tax Day!

When is the first term enough?: On approximation in social science

Research in the academic world suffers from the “hammer problem” – that is, the methods we use are often those that we have in our toolbox, not necessarily those that we should be using. This is especially true in computational social science, where we often attempt to directly import well-developed methods from the hard sciences.

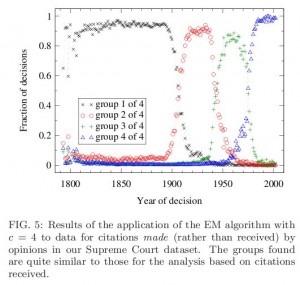

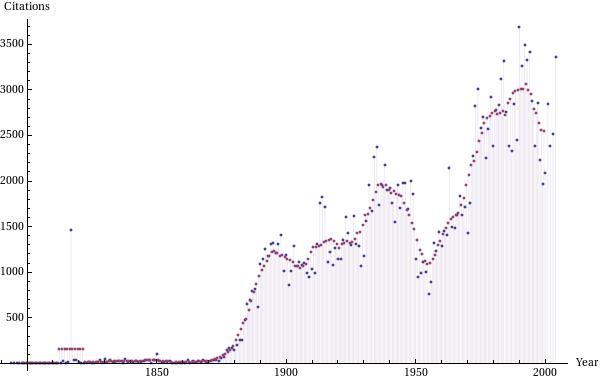

To prove the point, I’d like to highlight one example we’ve come across in our research. In Leicht et al.’s Large-scale structure of time evolving citation networks, the authors apply two methods to a simplified representation of the United States Supreme Court citation network. Both of these methods rely on complicated statistical algorithms and require iterative non-linear system solvers. However, the results are consistent, and they detect “events” around 1900, 1940, and 1970.

One first-order alternative to detecting significant “events” in the Court would be to count citations. One might suspect, for instance, that the formation or destruction of law might go hand-in-hand with an acceleration or deceleration in the rate of citation. Such a method is purely conjectural, but costs much less to implement than the methods discussed above.

This figure shows the number of outgoing citations per year in blue, as well as the ten-year moving average in purple. The plot shows jumps that coincide very well with the plot from Leicht et al. Thus, although only a first-order approximation to the underlying dynamics, this method would lead historians down a similar path with much less effort.
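To underline how little machinery this takes, here is a sketch of a moving average over yearly citation counts. We assume a trailing window (each year averaged with the years before it) that shortens at the start of the series; the figure may have used a different convention, and the counts below are made up.

```python
def trailing_moving_average(values, window=10):
    """Average each value with up to `window - 1` preceding values."""
    averages = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]  # drop the value leaving the window
        averages.append(running / min(i + 1, window))
    return averages

# Made-up citation counts per year, for illustration only.
citations = [10, 20, 30, 40]
smoothed = trailing_moving_average(citations, window=2)
```

For the ten-year average in the figure, the same function would be called with `window=10` on the full yearly series.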

This example, though simple, is one that really hits home for me. After a week of struggling to align interpretations and methods, this plot convinced me more than any eigenvector or Lagrangian system. Perhaps more importantly, unlike the above methods, you can explain this plot to a lay audience in a fifteen-minute talk.