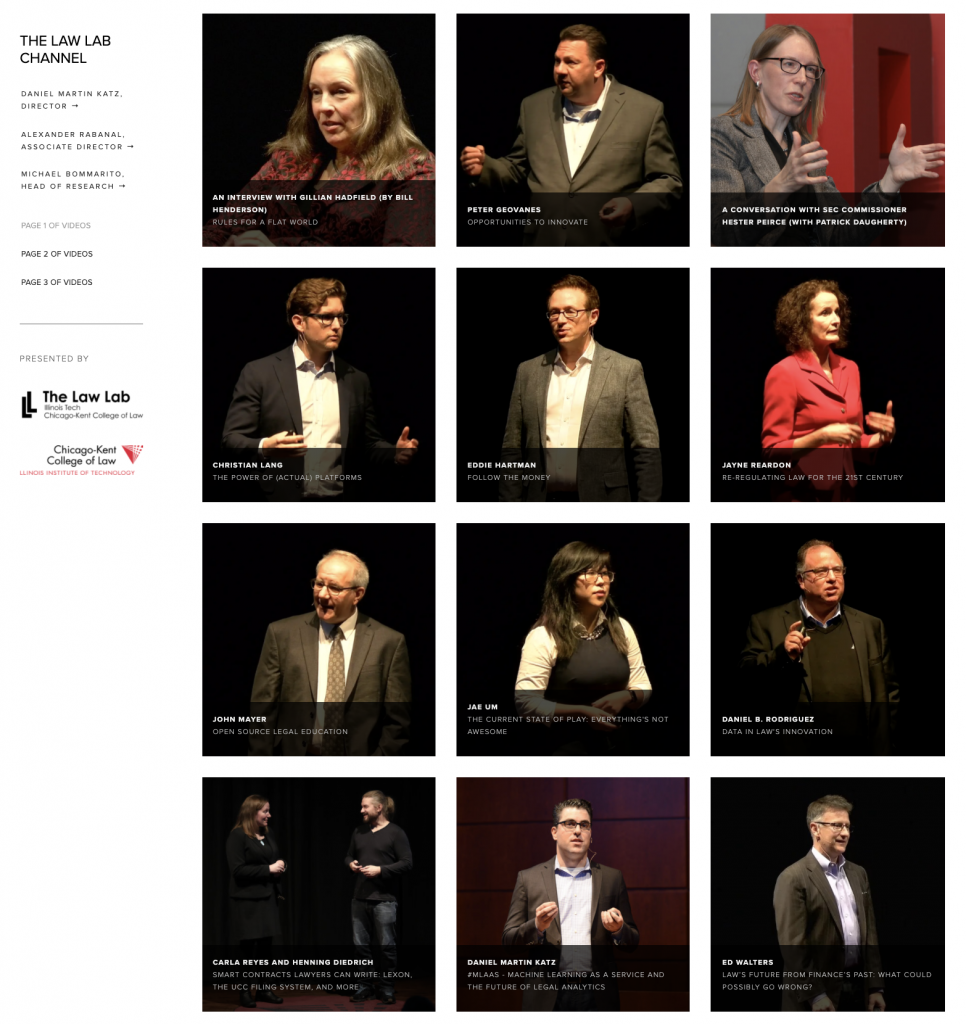

Over the past few years, we have hosted a number of conferences devoted to various sub-topics in legal innovation including The Make Law Better Conference, Fin Legal Tech Conference and the Block Legal Tech Conference. We have aggregated videos from these events on TheLawLabChannel.com for you to enjoy at your convenience.