Tag: data mining

Deb Roy: The Birth of a Word [TED 2011]

This is one of the better TED Talks I have seen to date. It is definitely worth watching!

From the abstract: MIT researcher Deb Roy wanted to understand how his infant son learned language — so he wired up his house with videocameras to catch every moment (with exceptions) of his son’s life, then parsed 90,000 hours of home video to watch “gaaaa” slowly turn into “water.” Astonishing, data-rich research with deep implications for how we learn.

For an interesting related talk, check out Patricia Kuhl: The Linguistic Genius of Babies (TEDxRainier).

Thomas Goetz: It’s Time to Redesign Medical Data [TEDMed]

The AI Revolution Is On [Via Wired Magazine]

From the Full Article: “AI researchers began to devise a raft of new techniques that were decidedly not modeled on human intelligence. By using probability-based algorithms to derive meaning from huge amounts of data, researchers discovered that they didn’t need to teach a computer how to accomplish a task; they could just show it what people did and let the machine figure out how to emulate that behavior under similar circumstances. … They don’t possess anything like human intelligence and certainly couldn’t pass a Turing test. But they represent a new forefront in the field of artificial intelligence. Today’s AI doesn’t try to re-create the brain. Instead, it uses machine learning, massive data sets, sophisticated sensors, and clever algorithms to master discrete tasks. Examples can be found everywhere …”

Introduction to Computing for Complex Systems — ICPSR 2010 — My Full Course Slides Available Online!

I am going to bump this post to the front of the blog one last time, as there has been some interest in this material. It has now been several weeks since we completed the full four-week class here at the ICPSR Program in Quantitative Methods. In this course, I (together with my colleagues) highlight the methods of complex systems as well as several environments designed to explore the field. These include NetLogo (agent-based models and network models), Vensim (system dynamics / ecological modeling), and Pajek (empirical network analysis). In the final week, we cover a variety of advanced topics:

- (a) Community Detection in Networks

- (b) Computational Linguistics / Natural Language Processing

- (c) Diffusion Models and Mathematical Modeling with Data

- (d) Exponential Random Graph (p*) Models

- (e) Information Retrieval / Webscraping

Although we do not work with more advanced languages within the course, those who need to conduct more complex analyses are directed to alternatives such as R, Python, Java, etc.

Anyway, the slides are designed to be fully self-contained and thus allow for individually paced study of the relevant material. If you work through the slides carefully you should be able to learn the software as well as many of the core principles associated with the science of complex systems. The material should be available online indefinitely. If you have questions, feel free to email me.

High Throughput Humanities – ECCS Satellite Conference (Lisboa 2010)

I am currently en route to Portugal for the very exciting High Throughput Humanities meeting on Wednesday. As we believe it fits well within the goals of the meeting, I will briefly present our work on the United States Code (including a brief preview of our still-unreleased new paper). For those not familiar with the meeting, if you click on the image above you will be taken to the main page. Also, here is the announcement for the meeting:

“The High Throughput Humanities satellite event at ECCS’10 establishes a forum for high throughput approaches in the humanities and social sciences, within the framework of complex systems science. The symposium aims to go beyond massive data acquisition and to present results beyond what can be manually achieved by a single person or a small group. Bringing together scientists, researchers, and practitioners from relevant fields, the event will stimulate and facilitate discussion, spark collaboration, as well as connect approaches, methods, and ideas.

The main goal of the event is to present novel results based on analyses of Big Data (see NATURE special issue 2009), focusing on emergent complex properties and dynamics, which allow for new insights, applications, and services.

With the advent of the 21st century, increasing amounts of data from the domain of qualitative humanities and social science research have become available for quantitative analysis. Private enterprises (Google Books and Earth, Youtube, Flickr, Twitter, Freebase, IMDb, among others) as well as public and non-profit institutions (Europeana, Wikipedia, DBPedia, Project Gutenberg, WordNet, Perseus, etc) are in the process of collecting, digitizing, and structuring vast amounts of information, and creating technologies, applications, and services (Linked Open Data, Open Calais, Amazon’s Mechanical Turk, ReCaptcha, ManyEyes, etc), which are transforming the way we do research.

Utilizing a complex systems approach to harness these data, the contributors of this event aim to make headway into the territory of traditional humanities and social sciences, understanding history, arts, literature, and society on a global-, meso- and granular level, using computational methods to go beyond the limitations of the traditional researcher.”

Recorded Future – A Temporal Analytics Engine

The Recorded Future Temporal Analytics Engine relies upon three steps to serve up information:

1. Scour the web: We continually scan thousands of news publications, blogs, niche sources, trade publications, government web sites, financial databases and more.

2. Extract, rank and organize: We extract information from text including entities, events, and the time that these events occur. We also measure momentum for each item in our index, as well as sentiment.

3. Make it accessible and useful: You can explore the past, present and predicted future of almost anything. Powerful visualization tools allow you to quickly see temporal patterns, or link networks of related information.
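
To make the "extract and organize" step a little more concrete, here is a toy sketch in Python. It is emphatically not Recorded Future's method: it assumes dates appear in ISO form (YYYY-MM-DD), uses a plain regular expression instead of real entity and event extraction, and the function name `extract_timed_mentions` and the sample sentences are made up for illustration.

```python
import re
from datetime import date

# Assumption: dates are written as YYYY-MM-DD. Real text needs far more robust parsing.
DATE_RE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")


def extract_timed_mentions(documents):
    """Return (date, text) pairs for every ISO date found, sorted by date."""
    mentions = []
    for text in documents:
        for year, month, day in DATE_RE.findall(text):
            try:
                when = date(int(year), int(month), int(day))
            except ValueError:
                # Skip strings that look like dates but are not valid (e.g. month 13).
                continue
            mentions.append((when, text))
    return sorted(mentions, key=lambda pair: pair[0])


# Hypothetical snippets standing in for scanned news sources.
documents = [
    "Acme plans a product launch on 2010-09-15.",
    "Regulators will publish the ruling on 2010-07-01.",
    "No date is mentioned in this sentence.",
]

timeline = extract_timed_mentions(documents)
for when, text in timeline:
    print(when.isoformat(), "|", text)
```

Sorting the mentions by date gives a crude timeline; ranking by momentum or sentiment, as described above, would need additional signals that this sketch does not attempt.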

Computational World Cup

The Financial Times’s Alphaville blog recently covered a number of quantitative models for predicting World Cup outcomes – models developed by well-known “quant” desks. Though this may seem like a waste of brains and shareholder value, World Cup outcomes are historically predictive of regional equity performance; furthermore, recent trends in securitization have not passed over sports as large as soccer. Here are the respective desks’ picks:

- JPM: England 1st, Spain 2nd, Netherlands 3rd (notes)

- UBS: Brazil 1st, Germany 2nd, Italy 3rd (notes, p. 37)

- GS: England, Argentina, Brazil, Spain (unranked) (notes, p. 71)

- Danske Bank: Brazil 1st, Germany 2nd (notes)

As could be expected, there is some disagreement as to the value of these predictions. Gary Jenkins of Evolution Securities chimes in with his own thoughts:

Yes it’s that time again when analysts like me who can barely predict what is going to happen in the market the following day turn away from our area of so called expertise and instead focus our attention on who is going to win the World Cup. I first got involved in this attempt to get some publicity 8 years ago, when Goldman Sachs produced a report combining economics and the World Cup and included their predictions as to who would get to the last four (I believe they got them all wrong) and had Sir Alex Ferguson pick his all time best World Cup team. I decided to do the same thing but had to explain that we could not afford Sir Alex. Thus I got my dad to pick his all time team. It caused more client complaints than most of my research and my favourites to win the tournament got knocked out early, so I abandoned this kind of research for a while.

Again, for more interesting coverage of the real-world effects of the World Cup, see FT Alphaville’s South Africa 2010 series. P.S. Go Azzurri this afternoon!

Bursts: The Hidden Pattern Behind Everything We Do

Albert-László Barabási, in his usual creative fashion, has produced an interesting game to help publicize his new book, Bursts: The Hidden Pattern Behind Everything We Do.

Read their description of the game below and check it out if you’re interested!

BuRSTS is a performance in human dynamics, a game of cooperation and prediction, that will gradually unveil the full text of Bursts. In a nutshell, if you register at http://brsts.com, you will be able to adopt one of the 84,245 words of the book. Once you adopt, the words adopted by others will become visible to you — thus as each word finds a parent, the whole book will become visible to the adopters. But if you invite your friends (and please do!) and you are good at predicting hidden content, the book will unveil itself to you well before all words are adopted. We will even send each day free signed copies of Bursts to those with the best scores.

Data on the Legal Blogosphere [Via the Library of Congress]

From the LOC Website … “The Law Library of Congress began harvesting legal blawgs in 2007. The collection has grown to more than one hundred items covering a broad cross section of legal topics. Blawgs can also be retrieved by keywords or browsed by subject, name, or title.” To access our visualization of the legal blogosphere (pictured below) … please click here.

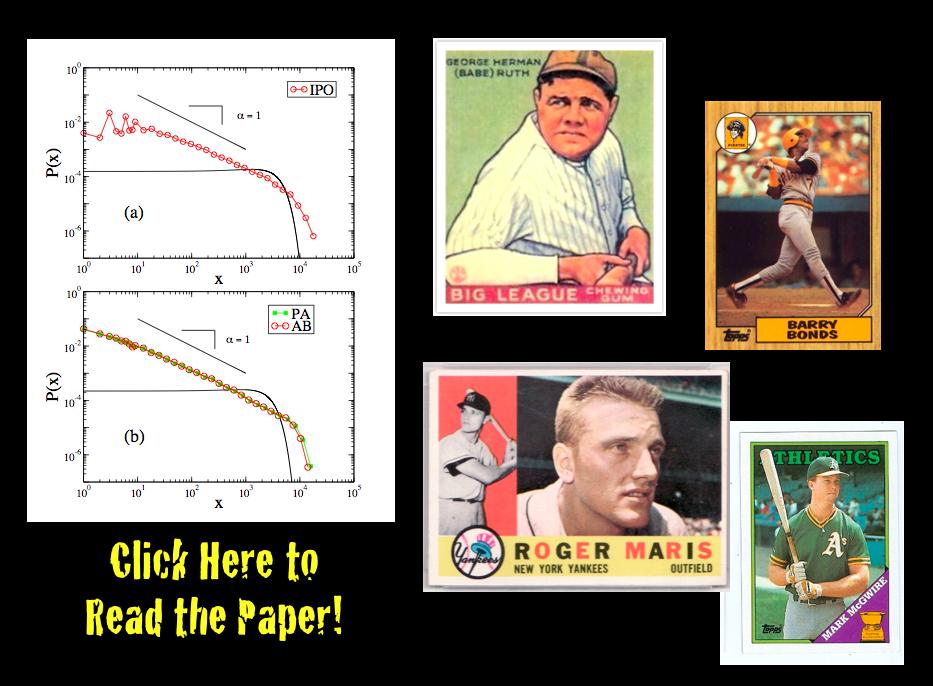

Detrending Career Statistics in Professional Baseball: Accounting for the Steroids Era and Beyond

From the abstract: “There is a long standing debate over how to objectively compare the career achievements of professional athletes from different historical eras. Developing an objective approach will be of particular importance over the next decade as Major League Baseball (MLB) players from the “steroids era” become eligible for Hall of Fame induction. Here we address this issue, as well as the general problem of comparing statistics from distinct eras, by detrending the seasonal statistics of professional baseball players. We detrend player statistics by normalizing achievements to seasonal averages, which accounts for changes in relative player ability resulting from both exogenous and endogenous factors, such as talent dilution from expansion, equipment and training improvements, as well as performance enhancing drugs (PED). In this paper we compare the probability density function (pdf) of detrended career statistics to the pdf of raw career statistics for five statistical categories — hits (H), home runs (HR), runs batted in (RBI), wins (W) and strikeouts (K) — over the 90-year period 1920-2009. We find that the functional form of these pdfs are stationary under detrending. This stationarity implies that the statistical regularity observed in the right-skewed distributions for longevity and success in professional baseball arises from both the wide range of intrinsic talent among athletes and the underlying nature of competition. Using this simple detrending technique, we examine the top 50 all-time careers for H, HR, RBI, W and K. We fit the pdfs for career success by the Gamma distribution in order to calculate objective benchmarks based on extreme statistics which can be used for the identification of extraordinary careers.”
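
For readers who want a feel for what "normalizing achievements to seasonal averages" might look like, here is a minimal Python sketch. It is a simplification under my own assumptions, not the paper's exact procedure: each season's value is rescaled by the ratio of a baseline (the mean of all seasonal averages) to that season's average, and the numbers and the name `detrend_career_totals` are invented for illustration.

```python
from collections import defaultdict
from statistics import mean


def detrend_career_totals(records):
    """records: iterable of (player, season, value) tuples.

    Returns a dict mapping each player to a detrended career total, where
    every season's value is scaled by baseline / season_average.
    """
    records = list(records)

    # Average value per season across all players in that season.
    by_season = defaultdict(list)
    for _, season, value in records:
        by_season[season].append(value)
    season_avg = {season: mean(values) for season, values in by_season.items()}

    if any(avg == 0 for avg in season_avg.values()):
        raise ValueError("cannot normalize by a season whose average is zero")

    # Assumption: the baseline is the mean of the seasonal averages.
    baseline = mean(list(season_avg.values()))

    career = defaultdict(float)
    for player, season, value in records:
        career[player] += value * baseline / season_avg[season]
    return dict(career)


# Invented home run counts: a low-scoring season and a high-scoring season.
records = [
    ("A", 1930, 10), ("B", 1930, 30),
    ("C", 2000, 30), ("D", 2000, 50),
]

detrended = detrend_career_totals(records)
for player, total in sorted(detrended.items()):
    print(player, round(total, 1))
```

In this toy data, players B and C both have 30 raw home runs, but B's come from a season where the average was lower, so B's detrended total ends up higher than C's.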