Tag: data mining

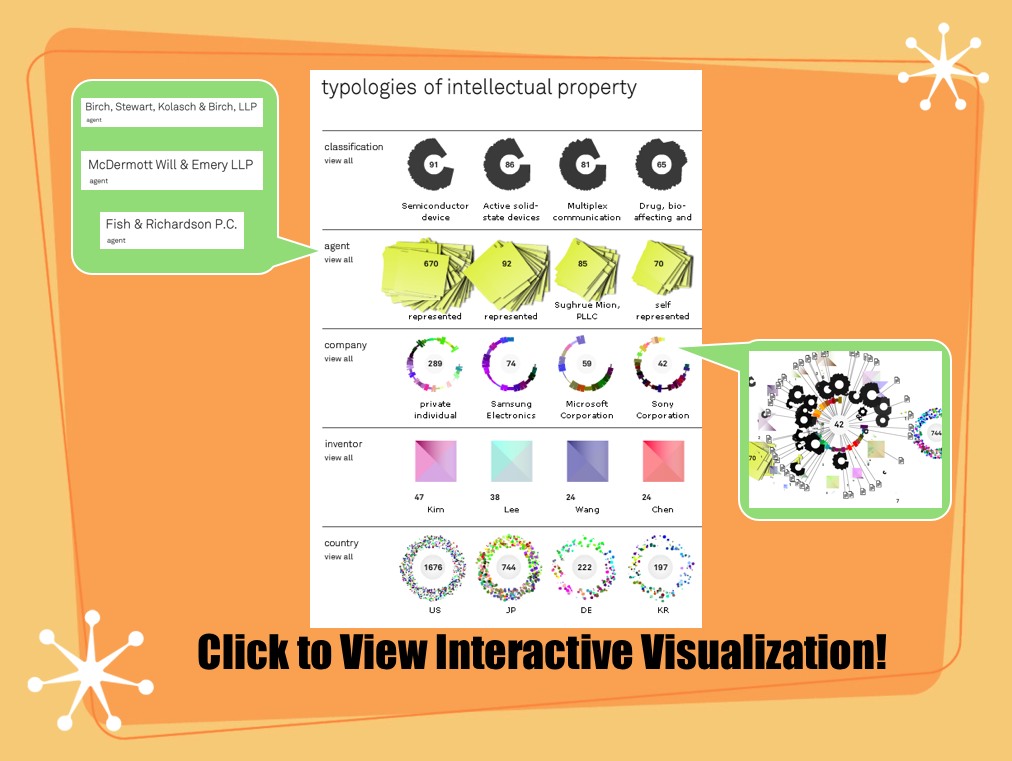

Real Time Visualization of US Patent Data [Via Infosthetics]

Drawing on data dating back to 2005 and updated weekly with information from data.gov, the Typologies of Intellectual Property project, created by information designer Richard Vijgen, offers almost real-time visualization of US patent data.

From the documentation … “[T]ypologies of intellectual property is an interactive visualization of patent data issued by the United States Patent and Trademark Office. Every week an xml file with about 3000 new patents is published by the USTPO and made available through data.gov. This webapplication provides a way to navigate, explore and discover the complex and interconnected world of idea, inventions and big business.”

Once you click through, adjust the date in the upper right corner to observe earlier time periods. For additional information or documentation, click “about this site” in the upper right corner. Enjoy!

HarambeeNet @ Duke Computer Science

We enjoyed today’s discussion at the HarambeeNet Conference here in the Duke Computer Science Department. The conference centers on network science and computer science education. It features a great deal of interdisciplinary scholarship and applications of computer science techniques in novel domains.

We are looking forward to an interesting final day of discussion and hope to participate in allied future conferences.

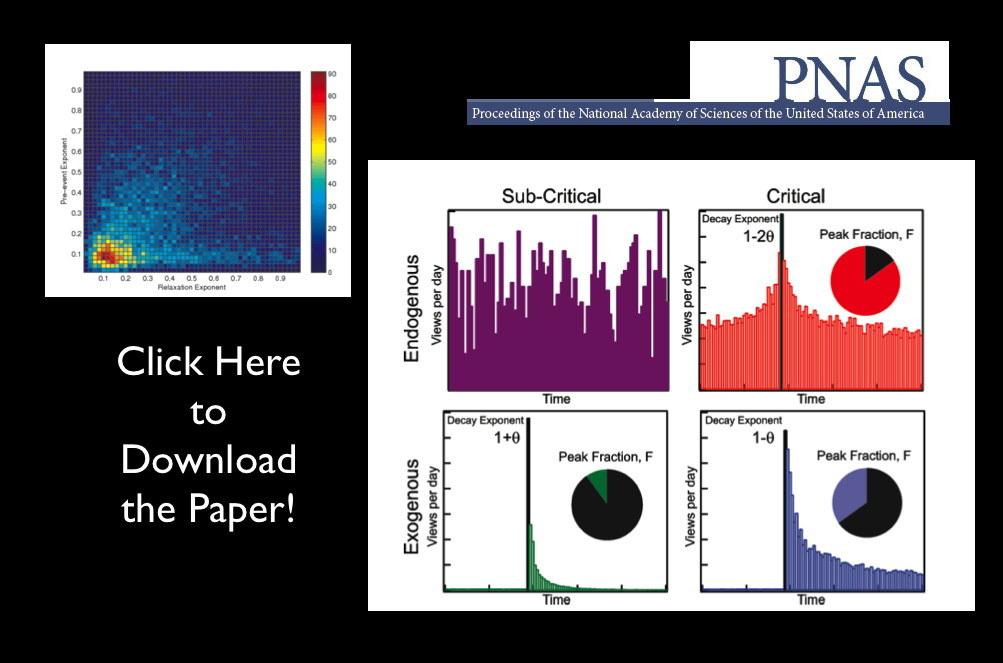

YouTube Research — Robust Dynamic Classes Revealed by Measuring the Response Function of a Social System

Here at the CSCS Lab, we are working hard to finish up some projects. In the meantime, we wanted to highlight one of our favorite articles, one we previously featured on the blog. Some of you might ask, “What does this have to do with law or social science?” (1) We believe the taxonomy outlined in this article could potentially be applied to a wide set of social phenomena. (2) As we say around here, if you are not reading outside your discipline, you are far less likely to be able to innovate within your discipline. So we suggest you consider downloading this paper….

Computational Social Science in Science Magazine [Repost from 3/22]

This article in a recent issue of Science Magazine, authored by some of the leaders in the field (including Gary King, James Fowler, David Lazer, Albert-László Barabási, and Lada Adamic, as well as several others), highlights some of the possibilities and perils of a computational revolution in the social sciences. We believe it is a worthwhile read….

How Python can Turn the Internet into your Dataset: Part 1

As we covered earlier, Drew Conway over at Zero Intelligence Agents has gotten off to a great start with his first two tutorials on collecting and managing web data with Python. However, critics of such automated collection might argue that the cost of writing and maintaining this code is higher than the return for small datasets. Furthermore, someone still needs to manually enter the players of interest for this code to work.

To convince these remaining skeptics, I decided to put together an example where automated collection is clearly the winner.

Problem: Imagine you wanted to compare Drew’s NY Giants draft picks with the league as a whole. How would you go about obtaining data on the rest of the league’s players?

Human Solution: If you planned to do this the old-fashioned manual way, you would probably decide to collect the player data team-by-team. On the NFL.com website, the first step would thus be to find the list of team rosters:

http://www.nfl.com/players/search?category=team&playerType=current

Now, you’d need to click through to each team’s roster. For instance, if you’re from Ann Arbor, you might be a Lions fan…

http://www.nfl.com/players/search?category=team&filter=1540&playerType=current

This is the list of current players for the Detroit Lions. In order to collect the desired player info, however, you’d again have to follow the link to each player’s profile page. For instance, you might want to check out the Lions’ own first-round pick:

http://www.nfl.com/players/matthewstafford/profile?id=STA134157

At last, you can copy down Stafford’s statistics. Simple enough, right? This might take all of 30 seconds with page load times and your spreadsheet entry.

The Lions have more than 70 players rostered (more than just active players); let’s assume this is representative. There are 32 teams in the NFL, so even by a conservative estimate there are over 2,000 players whose data you’d need to collect. If each of those 2,000 players took 30 seconds, you’d need about 17 person-hours to collect the data. You might hand this data entry over to a team of bored undergrads or graduate students, but then you’d need to worry about double-coding and the cost of labor. Furthermore, what if you wanted to extend this analysis to historical players as well? You’d better start looking for a source of funding…
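The estimate above can be checked in a few lines. This is only a back-of-the-envelope sketch using the figures quoted in the text; the constant and function names are illustrative.

```python
# Back-of-the-envelope cost of manual collection, using the figures in the text.
TEAMS = 32
PLAYERS_PER_TEAM = 70     # the Lions' roster size, assumed representative
SECONDS_PER_PLAYER = 30   # page loads plus spreadsheet entry


def manual_hours(num_players, seconds_per_player=SECONDS_PER_PLAYER):
    """Hours of manual data entry for the given number of players."""
    return num_players * seconds_per_player / 3600


# 32 teams x 70 players is comfortably above the conservative 2,000 figure.
roster_estimate = TEAMS * PLAYERS_PER_TEAM

print(roster_estimate)
print(round(manual_hours(2000), 1))
```

Running the same function on the roster-based estimate rather than the rounded 2,000 figure only pushes the manual cost higher.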

What if there was an easier way?

Python Solution:

The solution requires just 100 lines of code. An experienced Python programmer can produce this kind of code in half an hour over a beer at a place like Ashley’s. The program itself can download the entire data set in less than half an hour. In total, the data set is the product of less than an hour of work.
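The original 100-line program is not reproduced here, so what follows is only a minimal sketch of the same three-step crawl (team list, then rosters, then player profiles) using the standard library. The link patterns in `is_roster_link` and `is_profile_link` are assumptions inferred from the example URLs above, all function names are hypothetical, and the site’s layout may well have changed since this was written.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen

TEAM_LIST_URL = "http://www.nfl.com/players/search?category=team&playerType=current"


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def fetch(url):
    """Download a page and return its text."""
    with urlopen(url) as response:
        return response.read().decode("utf-8", errors="replace")


def links_matching(html, base_url, is_wanted):
    """Return absolute, de-duplicated links on a page that satisfy is_wanted."""
    parser = LinkCollector()
    parser.feed(html)
    seen, result = set(), []
    for href in parser.links:
        absolute = urljoin(base_url, href)
        if is_wanted(absolute) and absolute not in seen:
            seen.add(absolute)
            result.append(absolute)
    return result


def is_roster_link(url):
    # Assumption: roster URLs carry a "filter=" team id, as in the Lions example.
    return "category=team" in url and "filter=" in url


def is_profile_link(url):
    # Assumption: player pages look like /players/<name>/profile?id=...
    return "/players/" in url and "/profile" in url


def collect_profile_urls(get_page=fetch, start_url=TEAM_LIST_URL):
    """Walk team list -> rosters -> profile links and return the profile URLs."""
    profiles = []
    start_html = get_page(start_url)
    for roster_url in links_matching(start_html, start_url, is_roster_link):
        roster_html = get_page(roster_url)
        profiles.extend(links_matching(roster_html, roster_url, is_profile_link))
    return profiles
```

Passing the page-fetching function in as `get_page` keeps the crawl logic testable against saved pages, which helps when a site changes its markup. A real run should also pause between requests to avoid hammering the server.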

How long would it take your team of undergrads? Think about all the paperwork, explanations, formatting problems, delays, and cost…

The end result is a spreadsheet with the name, weight, age, height in inches, college, and NFL team for 2,520 players. This isn’t even the full list – for the purpose of this tutorial, players with missing data, e.g., unknown height, are not recorded.
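The last step, dropping players with missing data and writing the spreadsheet, might look roughly like this. It is a sketch under stated assumptions: the field names, the `clean_record` and `write_players` helpers, and the "feet-inches" height format (for example `6-2`) are all hypothetical, since the original parsing code is not shown.

```python
import csv
import io

FIELDS = ["name", "weight", "age", "height_inches", "college", "team"]


def height_to_inches(text):
    """Convert a 'feet-inches' string such as '6-2' to inches; None if unparseable."""
    try:
        feet, inches = text.split("-")
        return int(feet) * 12 + int(inches)
    except (AttributeError, ValueError):
        return None


def clean_record(raw):
    """Return a normalized record, or None if any field is missing."""
    record = {
        "name": raw.get("name"),
        "weight": raw.get("weight"),
        "age": raw.get("age"),
        "height_inches": height_to_inches(raw.get("height")),
        "college": raw.get("college"),
        "team": raw.get("team"),
    }
    if any(value in (None, "") for value in record.values()):
        return None
    return record


def write_players(raw_records, out_file):
    """Write complete records as CSV rows and return how many were kept."""
    writer = csv.DictWriter(out_file, fieldnames=FIELDS)
    writer.writeheader()
    kept = 0
    for raw in raw_records:
        record = clean_record(raw)
        if record is not None:
            writer.writerow(record)
            kept += 1
    return kept


# Made-up placeholder records: the second lacks a height and is skipped.
sample = [
    {"name": "Player A", "weight": "225", "age": "23", "height": "6-2",
     "college": "Example State", "team": "Example Team"},
    {"name": "Player B", "weight": "200", "age": "25", "height": None,
     "college": "Example Tech", "team": "Example Team"},
]
buffer = io.StringIO()
kept = write_players(sample, buffer)
print(kept)
```

Skipping incomplete records keeps the spreadsheet simple, at the cost of undercounting the league, which is why the 2,520 figure is not the full list.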

You can view the spreadsheet here. In upcoming tutorials, I’ll cover how to visualize and analyze this data with both standard statistical models and network models.

In the meantime, think about which of these two solutions makes for a better world.

How to Use Python to Collect Data from the Web [From Drew Conway]

We wanted to highlight a couple of very interesting posts by Drew Conway of Zero Intelligence Agents. While not simple, the Python programming language offers significant returns on investment. From a data acquisition standpoint, Python has made what seemed impossible quite possible. As a side note, this code looks like our first Bommarito-led Ann Arbor Python Club effort to download and process NBA box scores…. you know it is all about trying to win the fantasy league…!

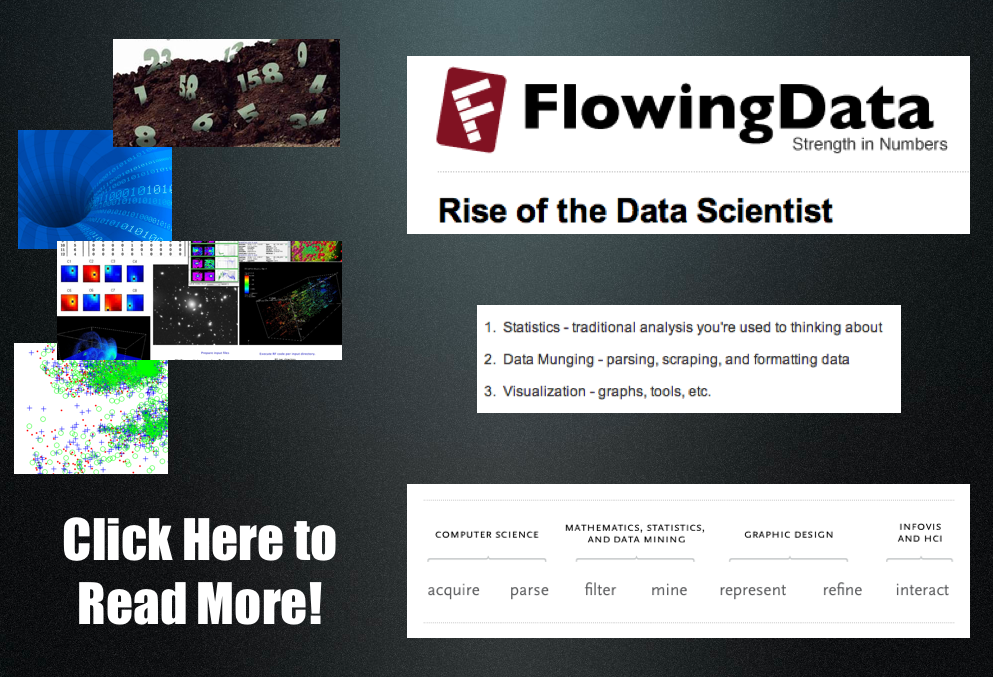

The Rise of the Data Scientist [From Flowing Data]

Earlier in the month, there was a very interesting discussion over at Flowing Data entitled the Rise of the Data Scientist. We decided to highlight it in this post because it raises important issues regarding the relationship between Computational Legal Studies and other movements within law.

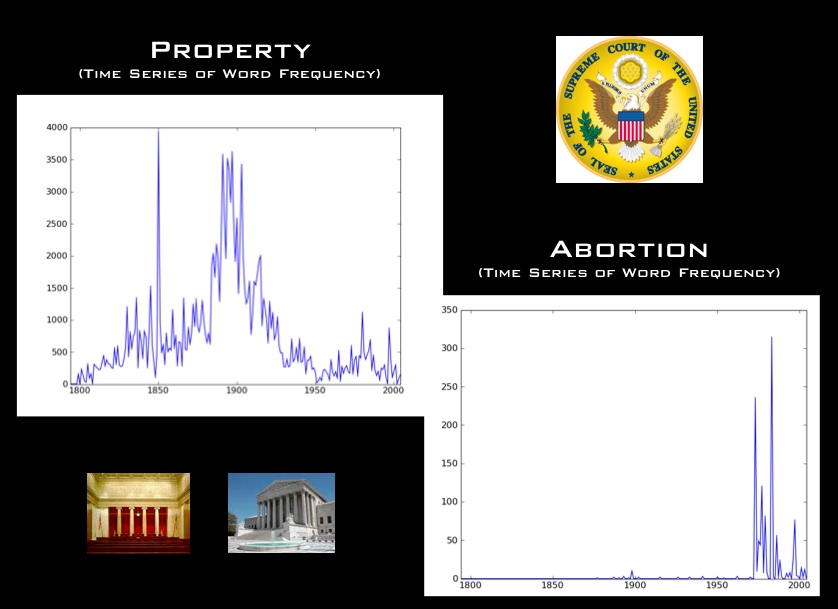

As we consider ourselves empiricists, we are strong supporters of the Empirical Legal Studies movement. For those not familiar, the vast majority of existing Empirical Legal Studies scholarship employs econometric techniques. For some substantive questions, these approaches are perfectly appropriate; for others, we believe techniques such as network analysis and computational linguistics are better suited. Even when appropriately employed, as displayed above, we believe the use of traditional statistical approaches should be seen as nested within a larger process. Namely, for a certain class of substantive questions, there exist tremendous amounts of readily available data. Thus, on the front end, computer science techniques such as web scraping and text parsing could help unlock existing large-N data sources, thereby improving the quality of the inferences collectively produced. On the back end, various methods of information visualization could democratize the scholarship by making the key insights available to a much wider audience.

It is worth noting that our commitment to Computational Legal Studies actually embraces a second important prong. From a mathematical modeling/formal theory perspective, at least for a certain range of questions, agent-based models/computational models ≥ closed-form analytical models. In other words, we are concerned that many paper-and-pencil game-theoretic models fail to incorporate interactions between components or the underlying heterogeneity of agents. Alternatively, they demonstrate the existence of an equilibrium P* without regard to whether such an equilibrium is reached on a timescale of interest. In some instances, these complications do not matter, but in other cases they are deeply consequential.

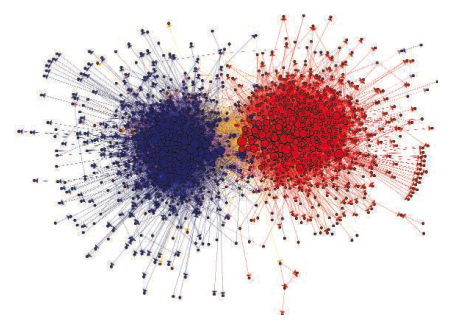

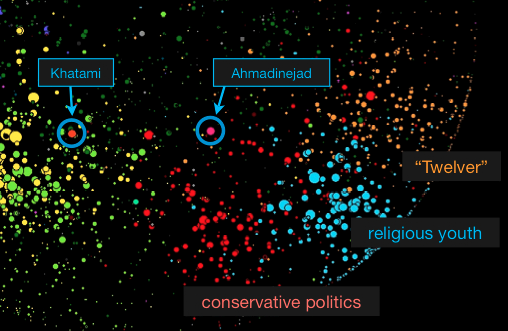

Iranian Blogosphere: Followup from Harvard NIPS 2009

We genuinely enjoyed our trip to Boston for the Networks in Political Science 2009 Conference at Harvard. There were many highlights, but given the timely nature of their work, we wanted to highlight the presentation by John Kelly & Bruce Etling entitled Mapping Culture, Politics, and Religion in the Arabic Blogosphere. This is a followup to last year’s presentation, Mapping Iran’s Online Public: Politics and Culture in the Persian Blogosphere. As usual, the folks at the Berkman Center are doing great work. Check out today’s New York Times, which features an article entitled Iranian Blogosphere Tests Government’s Limits.

Data.gov is Now Online

Important thing worth noting … data.gov went online during our break …. From the front page “The purpose of Data.gov is to increase public access to high value, machine readable datasets generated by the Executive Branch of the Federal Government. Although the initial launch of Data.gov provides a limited portion of the rich variety of Federal datasets presently available, we invite you to actively participate in shaping the future of Data.gov by suggesting additional datasets and site enhancements to provide seamless access and use of your Federal data. Visit today with us, but come back often. With your help, Data.gov will continue to grow and change in the weeks, months, and years ahead.”

Computer Programming and the Law — OR — How I Learned to Learn Live with Python and Leverage Developments in Information Science

One of our very first posts highlighted a recent article in Science Magazine describing the possibilities and perils of a computational revolution in the social sciences. A very timely article by Paul Ohm (UC-Boulder Law School) entitled Computer Programming and the Law: A New Research Agenda represents the legal studies analog of the Science Magazine article. From information retrieval to analysis to visualization, we believe this article outlines the Computational Legal Studies playbook in a very accessible manner.

Prior to founding this blog, we had little doubt that developments in informatics and the science associated with Web 2.0 would benefit the production of a wide class of theoretical and empirical legal scholarship. In order to lower the costs of collective action and generate a forum for interested scholars, we believed it would be useful to produce the Computational Legal Studies Blog. The early results have been very satisfying. For example, it has helped us link to the work of Paul Ohm.

For those interested in learning more about not only the potential benefits of a computational revolution in legal science but also some of the relevant mechanics, we strongly suggest you consider giving his new article a read!