Tag: computational legal studies

Big Data: The Next Frontier for Innovation, Competition and Productivity [Via McKinsey Global Institute]

There is growing interest in “Big Data” – both within the academy and within the private sector. For example, consider several major review articles on the topic including “Big Data” from Nature, “The Data Deluge” from The Economist and “Dealing with Data” from Science.

Indeed, those interested should consult the proceedings/video from recent conferences such as Princeton CITP Big Data 2010 (where I presented on the Big Data and Law panel), GigaOM 2011 NYC, O’Reilly Strata 2011 Making Data Work Conference, etc. A new report from the McKinsey Global Institute, entitled Big Data: The Next Frontier for Innovation, Competition and Productivity, summarizes some of these insights and adds new ones. This report was the subject of a recent NY Times article, New Ways to Exploit Raw Data May Bring Surge of Innovation, a Study Says. Here is one highlight from this article: “McKinsey says the nation will also need 1.5 million more data-literate managers, whether retrained or hired. The report points to the need for a sweeping change in business to adapt a new way of managing and making decisions that relies more on data analysis. Managers, according to the McKinsey researchers, must grasp the principles of data analytics and be able to ask the right questions.”

Of course, here at Computational Legal Studies, we are interested in the potential of a Big Data revolution in both legal practice and in the scientific study of law and legal institutions. Several recent articles on the subject argue that a major reordering is — well — already underway. For example, Law’s Information Revolution (By Bruce H. Kobayashi & Larry Ribstein), The Practice of Law in the Era of ‘Big Data’ (By Nolan M. Goldberg and Micah W. Miller) and Computer Programming and the Law: A New Research Agenda (By Paul Ohm) highlight different elements of the broader question.

We hope to share additional thoughts on this topic in the months to come. In the meantime, I would highlight the slides from my recent presentation at the NELIC Conference at Berkeley Law. My brief talk was entitled Quantitative Legal Prediction and it is a preview of some of my thoughts on the changing market for legal services. Please stay tuned.

Bommarito, Katz & Isaacs-See –> Virginia Tax Review [ Online Supplement and Datasets ]

Our paper An Empirical Survey of the Population of United States Tax Court Written Decisions was recently published in the Virginia Tax Review. We have just placed supplementary materials online (click here or above to access).

Simply put, our paper is a “dataset paper.” While such papers are common in the social and physical sciences, there are far fewer (actually borderline zero) “dataset papers” in legal studies.

In our estimation, the goals of a “dataset paper” are threefold:

- (1) Introduce the data collection process with specific emphasis upon why the collection method was able to identify the targeted population

- (2) Highlight some questions that might be considered using this and other datasets

- (3) Make the data set available to various applied scholars who might like to use it

As subfields such as empirical legal studies mature (and in turn legal studies starts to look more like other scientific disciplines) it would be reasonable to expect to see additional papers of this variety. With the publication of the online supplement, we believe our paper has achieved each of these goals. Whether our efforts prove useful for others — well — only time will tell!

My Slides from New and Emerging Legal Infrastructures Conference (NELIC) [ @ Berkeley Law ]

UPDATED SLIDES – Midwest Law & Econ Association – Indiana Law – Sept 2011

The Future of Computational Social Science @ University of Washington – Submissions Due Jan. 30, 2011

As a member of the conference program committee, I would like to encourage you to consider submitting a paper to “The Future of Computational Social Science” at the University of Washington – Seattle, May 16-17, 2011. The conference aims to bring together leaders from industry and the academy to present cutting-edge work. For those who might be interested in attending, please click on the image above or here to access the call for papers. Here is an excerpt:

“Computational social science is an emergent field and source of new theoretical and methodological innovation for social science more broadly. Multidisciplinary teams of social and computer scientists are increasingly common in the lab and at workshops where cross-fertilization occurs in the areas of theory, data, methods, and tools. Peer-reviewed interdisciplinary work is becoming more common as the computational tools and techniques of computer science are being used by social scientists. Previously, large-scale computational processing was the purview of expensive, university-centric computing labs. Now, with the democratization of technology, universities and for-profit firms increasingly provide large amounts of inexpensive computing power to researchers and citizens alike.”

Authors are invited to prepare and submit to JITP a research paper, policy viewpoint, workbench note, or teaching innovation manuscript by January 30, 2011. There is also an option to submit an abstract for potential inclusion in the poster session. The poster abstract is also due January 30, 2011.

Law as a Complex Adaptive System: An Updated Reading List / Syllabus

As a new semester is here at Michigan CSCS, I have made several revisions to the content of our global reading list for the Computational Legal Studies Working Group. This interdisciplinary reading list features work from economics, physics, sociology, biology, computer science, political science, public policy, theoretical and empirical legal studies and applied math. I wanted to highlight this reading list for anyone who is interested in learning more about the state of the literature in this interdisciplinary space. Also, for those interested in learning model implementation, please consult my slides from the 2010 ICPSR Course Introduction to Computing for Complex Systems. Feel free to email me if you have any questions.

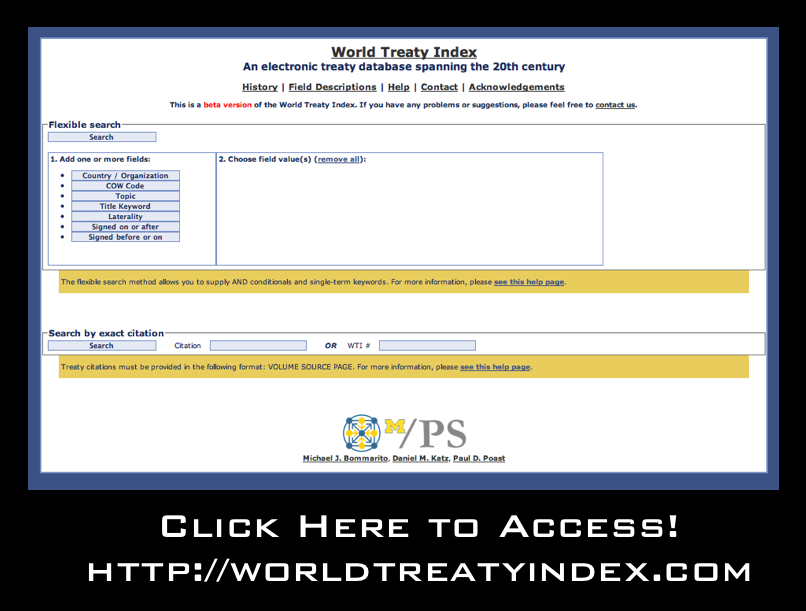

Announcing the Beta Prerelease of the New Electronic World Treaty Index

What is the World Treaty Index?

The World Treaty Index (WTI), originally compiled by Peter Rohn in the 1960s and 1970s and subsequently maintained and updated at the University of Washington, is a comprehensive list of all known treaties formed during the twentieth century. This includes not only treaties formally registered with the United Nations (UNTS) but a significant number of unregistered agreements.

What information does the World Treaty Index provide?

The WTI provides information on the parties to the agreement, the general topic of the agreement (e.g. trade agreement, tax agreement, arms control agreement, etc.), as well as the signing date and the date in force, and the volume and page containing the text of the agreement. Though the WTI does not provide the full text of each agreement, it is an excellent resource for identifying when a state (or states) formed a number of international agreements of a particular type. With a list of relevant agreements (including their volume and page number), an end user interested in obtaining the full text can simply collect them using the primary source material (e.g. UNTS, LTS).

Who is currently administrating the World Treaty Index?

The Electronic WTI is now housed at the University of Michigan and administrated by Michael Bommarito, Daniel Martin Katz and Paul Poast. We are highlighting the newly constructed Beta Prerelease of the WTI website in an effort to obtain feedback prior to the official release. The currently available product provides access to information on more than 58,000 bilateral and multilateral treaties formed between 1900 and 1997. When full coverage for the 20th century is complete, the database should feature in excess of 70,000 agreements.

What are some examples of searches I can conduct on the World Treaty Index website?

While the WTI should support all browsers, we suggest using Firefox. Below are three sample searches.

Search #1: Suppose a user would like to collect all agreements involving Brazil. Use the “flexible search” and follow three easy steps. (A) Select the country/organization field (B) within the country/organization field set the field value = Brazil (C) click the search button.

Search #2: Suppose a user would like to collect all agreements between Mexico and Spain. Use the “flexible search” and follow five easy steps. (A) Select the country/organization field (B) within the country/organization field set the field value = Mexico (C) Select a second country/organization field (D) within this new country/organization field set the field value = Spain (E) now click the search button.

Search #3: Suppose a user would like to know how many extradition agreements France signed between 1950 and 1962. This is similar to the examples above but involves the topic, signed on or after and signed before or on fields. After the user chooses the proper search fields and selects the search information, the WTI will produce on the screen a list of the desired agreements and provide the option of downloading the list as a CSV file.
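Once a list has been downloaded as a CSV file, the same kind of query can be repeated offline. The following is only a rough sketch: the column names (`treaty_id`, `party_1`, `party_2`, `topic`, `signed`) and the sample rows are hypothetical placeholders, not the WTI's actual export layout or real treaties, so adjust them to whatever the downloaded file contains.

```python
import csv
import io
from datetime import date

# Hypothetical export: the column names and rows below are made up for
# illustration and are not the WTI's actual CSV layout or real treaties.
SAMPLE_CSV = """treaty_id,party_1,party_2,topic,signed
T1,France,Country A,Extradition,1951-03-10
T2,France,Country B,Trade,1955-06-01
T3,Country C,France,Extradition,1962-12-31
T4,France,Country D,Extradition,1963-01-01
"""


def filter_agreements(csv_text, party, topic, signed_from, signed_to):
    """Return rows involving `party` on `topic`, signed within the inclusive date range."""
    matches = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        signed = date.fromisoformat(row["signed"])
        involves_party = party in (row["party_1"], row["party_2"])
        in_window = signed_from <= signed <= signed_to  # "on or after" / "before or on"
        if involves_party and row["topic"] == topic and in_window:
            matches.append(row)
    return matches


france_extradition = filter_agreements(
    SAMPLE_CSV, "France", "Extradition", date(1950, 1, 1), date(1962, 12, 31)
)
print(len(france_extradition))  # 2 (rows T1 and T3 in the toy data)
```

Both date bounds are treated as inclusive here, mirroring the “signed on or after” and “signed before or on” search fields.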

When will it feature full coverage for the entire 20th Century?

By the end of 2010, we will add (1) all bilateral treaties formed between 1900 and 1944 [Now Mostly Done] (2) all bilateral treaties formed between 1998 and 1999, and (3) all multilateral agreements and a list of all parties to each multilateral agreement. If you know of an agreement that is not ultimately featured on the site please contact us and we will be happy to add it to the list.

How can I learn more about the World Treaty Index?

For a general history of the World Treaty Index, visit the “History Page” on the worldtreatyindex.com website. For a more detailed treatment please see: Glenda Pearson, Rohn’s World Treaty Index: Its Past and Future, 29 International Journal of Legal Information 543 (2001).

What additional extensions of the Electronic World Treaty Index are planned?

As noted above, our initial goal is to provide complete coverage of all known agreements in the 20th Century. Planned extensions include bringing the World Treaty Index forward so as to provide coverage up to 2010. In addition, we plan to collect information regarding treaty terminations. Finally, we would like to enhance the granularity of our topic codes and allow for agreements with multiple dimensions to feature multiple topic codes.

Feedback?

At this point, we have only offered a beta pre-release of the site. Thus, we would really appreciate your feedback, etc. Please email us at worldtreatyindex@gmail.com if you have any thoughts about how to improve the site.

Measuring the Complexity of the Law : The United States Code

Understanding the sources of complexity in legal systems is a matter long considered by legal commentators. In tackling the question, scholars have applied various approaches including descriptive, theoretical and, in some cases, empirical analysis. The list is long but would certainly include work such as Long & Swingen (1987), Schuck (1992), White (1992), Kaplow (1995), Epstein (1997), Kades (1997), Wright (2000) and Holz (2007). Notwithstanding the significant contributions made by these and other scholars, we argue that an extensive empirical inquiry into the complexity of the law still remains to be undertaken.

While certainly just a slice of the broader legal universe, the United States Code represents a substantively important body of law familiar to both legal scholars and laypersons. In published form, the Code spans many volumes. Those volumes feature hundreds of thousands of provisions and tens of millions of words. The United States Code is obviously complicated; however, measuring its size and complexity has proven to be non-trivial.

In our paper entitled A Mathematical Approach to the Study of the United States Code, we hope to contribute to the effort by formalizing the United States Code as a mathematical object with a hierarchical structure, a citation network and an associated text function that projects language onto specific vertices.

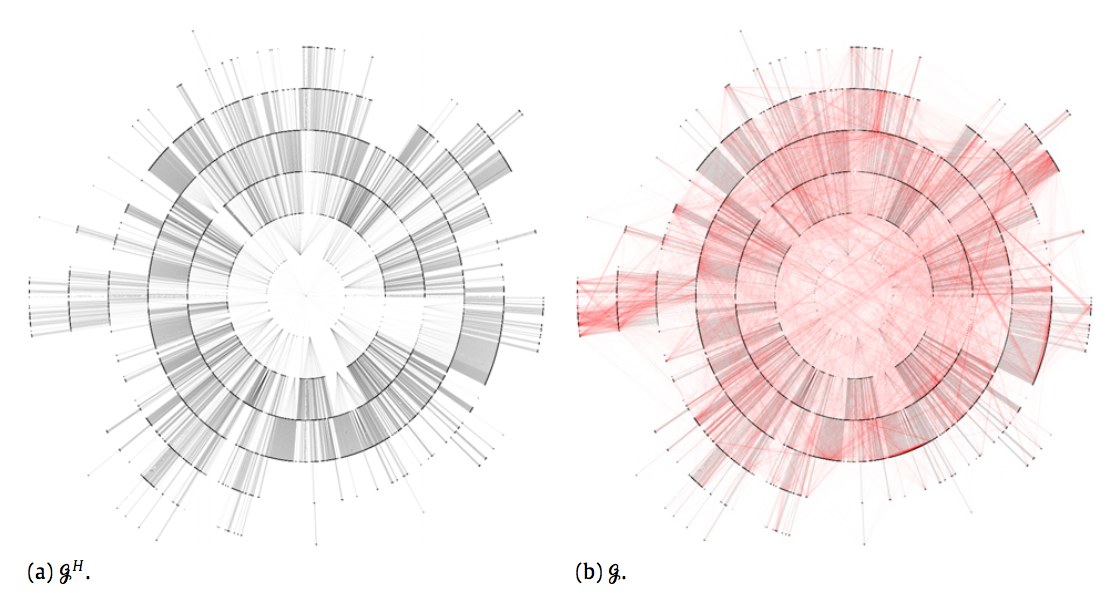

In the visualization above, Figure (a) is the full United States Code visualized to the section level. In other words, each ring is a layer of a hierarchical tree that halts at the section level. Of course, many sections feature a variety of nested sub-sections, etc. For example, the well known 26 U.S.C. 501(c)(3) is only shown above at the depth of Section 501. If we added all of these layers there would simply be additional rings. For those interested in the visualization of specific Titles of the United States Code … we have previously created fully zoomable visualizations of Title 17 (Copyright), Title 11 (Bankruptcy), Title 26 (Tax) [at section depth], Title 26 (Tax) [Capital Gains & Losses] as well as specific pieces of legislation such as the original Health Care Bill — HR 3962.

In the visualization above, Figure (b) combines this hierarchical structure together with a citation network. We have previously visualized the United States Code citation network and have a working paper entitled Properties of the United States Code Citation Network. Figure (b) is thus a realization of the full United States Code through the section level.

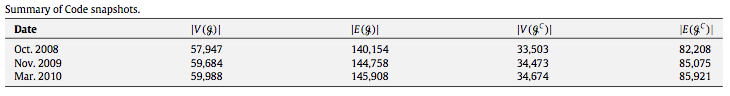

With this representation in place, it is possible to measure the size of the Code using its various structural features such as its vertices V and its edges E. It is possible to measure the full Code at various time snapshots and consider whether the Code is growing or shrinking. Using a limited window of data, we observe growth not only in the size of the Code but also in its network of dependencies (i.e. its citation network).
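To make the idea concrete, here is a minimal sketch of how such a representation might be stored and measured. It is not the paper's implementation: the `CodeSnapshot` class, the `growth` helper and the toy labels ("Title A", "A-1", and so on) are hypothetical names invented for illustration, and the two snapshots are made-up data rather than real provisions or citations.

```python
from dataclasses import dataclass, field


@dataclass
class CodeSnapshot:
    """Toy snapshot: a hierarchy (parent -> children) plus citation edges."""
    hierarchy: dict = field(default_factory=dict)   # parent label -> list of child labels
    citations: set = field(default_factory=set)     # (citing vertex, cited vertex) pairs

    def vertices(self):
        # Every parent and every child in the hierarchy is a vertex.
        nodes = set(self.hierarchy)
        for children in self.hierarchy.values():
            nodes.update(children)
        return nodes

    def size(self):
        hierarchy_edges = sum(len(children) for children in self.hierarchy.values())
        return {
            "V": len(self.vertices()),
            "E_hierarchy": hierarchy_edges,
            "E_citation": len(self.citations),
        }


def growth(earlier, later):
    """Difference in each size measure between two snapshots."""
    before, after = earlier.size(), later.size()
    return {key: after[key] - before[key] for key in before}


# Two made-up snapshots of a tiny "code" (illustrative labels only).
snapshot_1 = CodeSnapshot(
    hierarchy={"Code": ["Title A", "Title B"], "Title A": ["A-1", "A-2"], "Title B": ["B-1"]},
    citations={("A-1", "B-1")},
)
snapshot_2 = CodeSnapshot(
    hierarchy={"Code": ["Title A", "Title B"], "Title A": ["A-1", "A-2"], "Title B": ["B-1", "B-2"]},
    citations={("A-1", "B-1"), ("B-2", "A-2"), ("A-2", "B-1")},
)

print(snapshot_1.size())                 # {'V': 6, 'E_hierarchy': 5, 'E_citation': 1}
print(growth(snapshot_1, snapshot_2))    # {'V': 1, 'E_hierarchy': 1, 'E_citation': 2}
```

Keeping hierarchy edges and citation edges in separate counts makes it possible to see whether growth comes from new provisions or from new dependencies between existing ones.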

Of course, growth in the size of the United States Code alone is not necessarily analogous to an increase in complexity. Indeed, while we believe in general the size of the Code tends to contribute to “complexity,” some additional measures are needed. Thus, our paper features structural measurements such as number of sections, section sizes, etc.

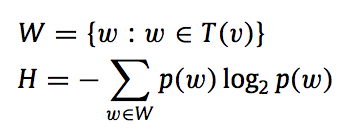

In addition, we apply the well known Shannon Entropy measure (borrowed from Information Theory) to evaluate the “complexity” of the message passing / language contained therein. Shannon Entropy has a long intellectual history and has been used as a measure of complexity by many scholars. Here is the formula for Shannon entropy:
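In its standard textbook form, for a discrete random variable $X$ taking values $x_1, \dots, x_n$ with probabilities $p(x_i)$, the measure is

$$H(X) = -\sum_{i=1}^{n} p(x_i) \log_2 p(x_i)$$

As a rough illustration, the sketch below estimates this quantity from the empirical word frequencies of a piece of text. The function names and the simple lowercase, whitespace-based tokenization are assumptions made here for illustration; the paper's own preprocessing may well differ.

```python
import math
from collections import Counter


def shannon_entropy(tokens):
    """Shannon entropy (in bits) of the empirical distribution of `tokens`."""
    counts = Counter(tokens)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def word_entropy(text):
    """Entropy of a text's word distribution (assumed tokenization: lowercase, split on whitespace)."""
    return shannon_entropy(text.lower().split())


# Four equally likely tokens carry log2(4) = 2 bits each.
print(shannon_entropy(["a", "b", "c", "d"]))  # 2.0
# Two equally likely tokens carry 1 bit each.
print(word_entropy("shall shall may may"))    # 1.0
```

A text that repeats a small vocabulary scores low, while a text that spreads its words evenly over a large vocabulary scores high, which is the sense in which entropy serves as a proxy for the “complexity” of language.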

For those interested in reviewing the full paper, it is forthcoming in Physica A: Statistical Mechanics and its Applications. For those not familiar, Physica A is a journal published by Elsevier and is a popular outlet for Econophysics and Quantitative Finance. A current draft of the paper is available on SSRN and the physics arXiv.

We are currently working on a follow up paper that is longer, more detailed and designed for a general audience. Even if you have little or no interest in the analysis of the United States Code, we hope principles such as entropy, structure, etc. will prove useful in the measurement of other classes of legal documents including contracts, treaties, administrative regulations, etc.