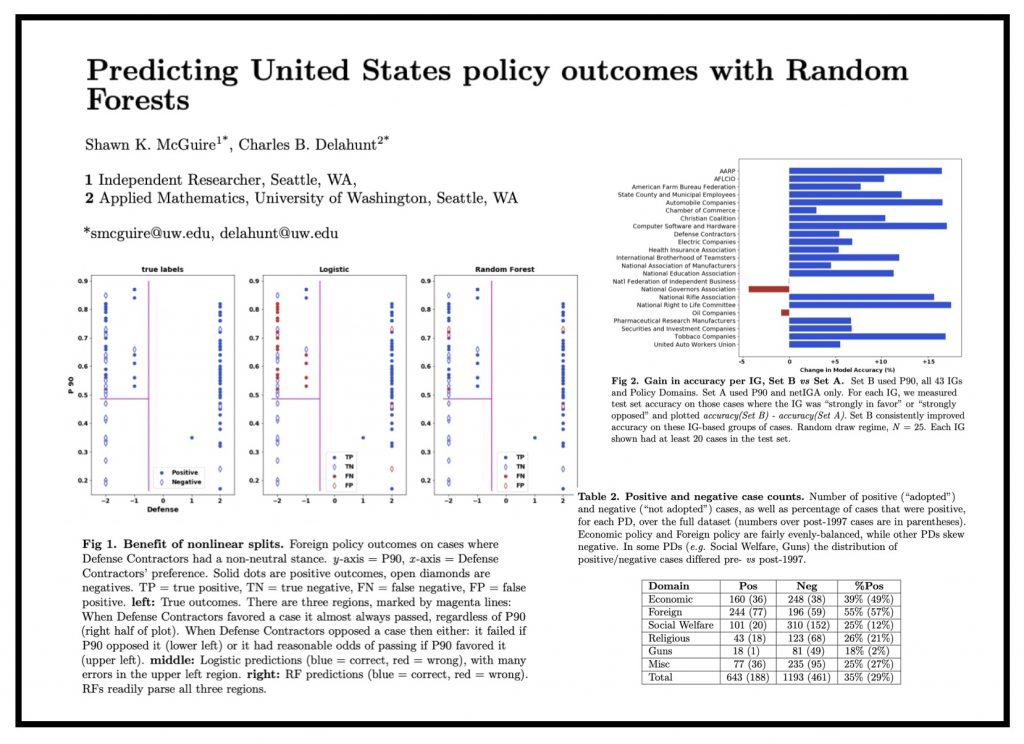

An interesting paper that follows on from a number of machine learning / NLP-driven legislative prediction or government prediction papers. A draft of the paper is available on arXiv.

For more examples, see the following papers:

Gerrish SM, Blei DM. “Predicting legislative roll calls from text”. ICML, 2011.

Yano T, Smith NA, Wilkerson JD. “Textual Predictors of Bill Survival in Congressional Committees”. Proc 2012 Conf N Amer Chapter Assoc Comp Linguistics, Human Language Technologies, 2012.

Katz DM, Bommarito MJ, Blackman J. “A general approach for predicting the behavior of the Supreme Court of the United States”. PLOS One, 2017.

Nay, J. “Predicting and Understanding Law Making with Word Vectors and an Ensemble Model.” PLOS One, 2017.

Waltl, Bernhard Ernst. “Semantic Analysis and Computational Modeling of Legal Documents.” PhD diss., Technische Universität München, 2018.

Davoodi, Maryam, Eric Waltenburg, and Dan Goldwasser. “Understanding the Language of Political Agreement and Disagreement in Legislative Texts.” In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pp. 5358-5368. 2020.