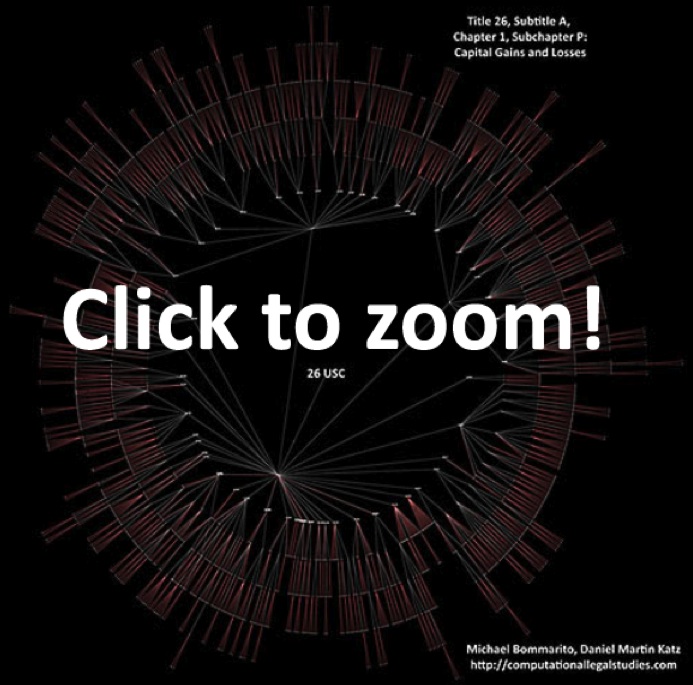

In our original post on Title 26, we displayed every subtitle of Title 26, A through K, down to the section level. We did not, however, display the elements of the code below the section level. For example, our prior visualization stops at §501 and does not distinguish between §501(c)(3) and §501(c)(2). This is a function of the size of Title 26: the full labeled image is too large to render and distribute over the Internet. However, by working with only a subset of Title 26 of the United States Code, we can provide a full-depth, zoomable visualization.
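To make the difference between section-level and full-depth display concrete, here is a minimal sketch in Python. It is not the code behind our visualizations; the function names `parse_citation` and `truncate_to_section` are hypothetical, and the pattern assumes simple citations of the form §NNN(x)(y)... only.

```python
import re
from typing import List

# Assumed citation shape: "§", a section number (optionally with a letter
# suffix, e.g. 45A), then zero or more parenthesized sub-elements.
_CITATION = re.compile(r"§\s*(\d+[A-Za-z]*)((?:\([0-9A-Za-z]+\))*)")


def parse_citation(citation: str) -> List[str]:
    """Split a citation into its path from the section down.

    Hypothetical helper for illustration only.
    """
    match = _CITATION.fullmatch(citation.strip())
    if match is None:
        raise ValueError(f"unrecognized citation: {citation!r}")
    section, tail = match.groups()
    # Each "(x)" in the tail is one level deeper in the hierarchy.
    return [section] + re.findall(r"\(([0-9A-Za-z]+)\)", tail)


def truncate_to_section(citation: str) -> str:
    """Collapse a citation to the section level, as the earlier visual did."""
    return "§" + parse_citation(citation)[0]


if __name__ == "__main__":
    print(parse_citation("§501(c)(3)"))
    print(truncate_to_section("§501(c)(3)"))
    print(truncate_to_section("§501(c)(2)"))
```

At the section level, both §501(c)(3) and §501(c)(2) collapse to the same node, §501. A full-depth view keeps every element of the path as its own labeled node.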

We’ve chosen to highlight Subtitle A, Chapter 1, Subchapter P: Capital Gains and Losses, as this subchapter represents the structural complexity of the code well. Even though it displays only a single subchapter, without any Treasury Regs or SEC guidance, this visual contains more than 1,700 citable elements. If you look closely, you can see the labels for each and every piece of this subchapter, including §1221(b)(2)(A)(ii), which is part of the definition of “hedging transactions” (e.g., swaps) for the purpose of the tax code: “to manage risk of interest rate or price changes or currency fluctuations with respect to borrowings made or to be made, or ordinary obligations incurred or to be incurred, by the taxpayer.” For details on the coloring of this visual, see our previous post visualizing the Bankruptcy Code (11 U.S.C.), located here.