Access article here. Whether or not this is precisely accurate, any measure that says The Law Lab @ Chicago Kent is #1 in Legal Innovation has to be correct 🙂

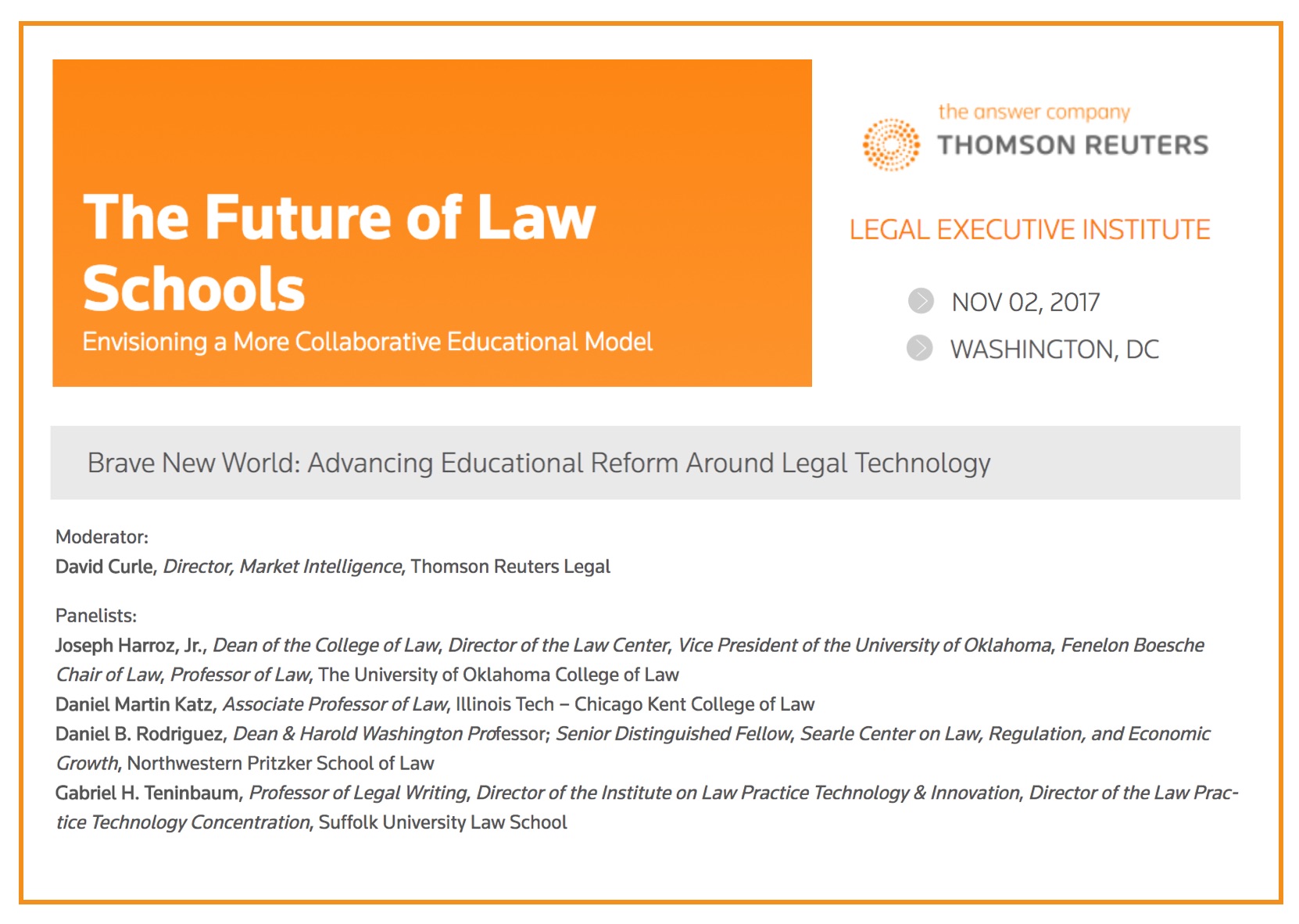

The Future of Law Schools Conference – Organized by the Thomson Reuters Legal Executive Institute

Tomorrow I will be speaking at The Future of Law Schools Conference – Organized by the Thomson Reuters Legal Executive Institute.

Here is our panel, which kicks off the afternoon:

Moderator:

David Curle, Director, Market Intelligence, Thomson Reuters Legal

Panelists:

Daniel B. Rodriguez, Dean @ Northwestern Pritzker School of Law

Joseph Harroz, Dean @ University of Oklahoma

Daniel Martin Katz, Assoc. Prof @ Illinois Tech – Chicago Kent Law

Gabriel H. Teninbaum, Professor @ Suffolk University Law School

Tanina Rostain, Professor @ Georgetown Law

Daniel Katz Inducted in the College of Law Practice Management – COLPM 2017

Last night I was inducted into the College of Law Practice Management. It is a real honor to join an amazing class of inductees and current fellows! #COLPM

Futures Conference and Induction of Fellows – The College of Law Practice Management (COLPM)

Very excited to be here for the Futures Conference of the College of Law Practice Management. Tonight I will be inducted as a Fellow of the College (along with a range of other inductees).

10 Legal-Tech Lessons from Dollars to Doughnuts at Fin (Legal) Tech (via ABA Journal)

See coverage of our conference here

Delivering the Thursday Keynote @ The Legal Week Strategic Technology Forum USA 2017

Tomorrow I will deliver the Thursday Keynote at the Legal Week Strategic Technology Forum in San Diego. I look forward to connecting with old friends and making new friends at the event!

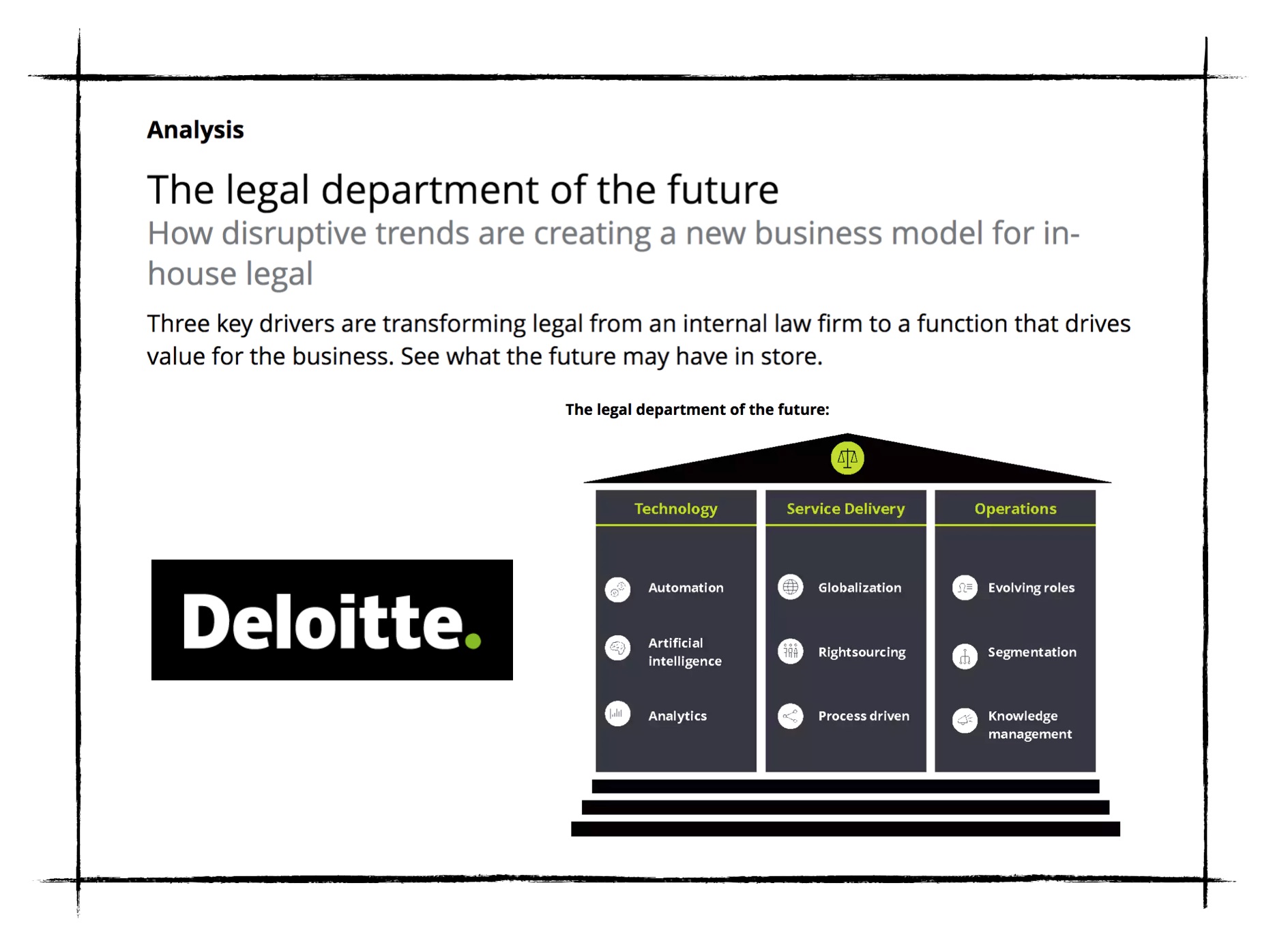

The Legal Department of the Future (via Deloitte)

All of these trends are well documented … so no new ground here … but interesting to see this surface …

Honored to Deliver the Keynote Address at the NALP Summit on Emerging Careers for Law Grads

Honored to deliver the keynote at yesterday’s NALP Summit on Emerging Careers for Law Grads.

Cassels Brock Holds Inaugural Legal Innovation Forum – Toronto

Thanks to Marlon Hylton and the rest of the team from Cassels Brock for inviting me to keynote their inaugural Legal Innovation Forum!

Demo Night at Frankfurt Legal Tech for ContraxSuite

Last week we had the pleasure of offering a demo at the Frankfurt Legal Technology and Innovation Meetup – Demo Night. We gave a remote demo of ContraxSuite (our contracts / legal document analytics platform).

Draft Agenda for the 2017 Fin (Legal) Tech Conference is Now Live

The Draft Agenda for the 2017 Fin (Legal) Tech Conference is now live: One Stage, No Panels, 20+ Speakers in 1 Day, with more speakers to be announced soon. Sign up for a FREE ticket today. See you on October 19, 2017 in Chicago!

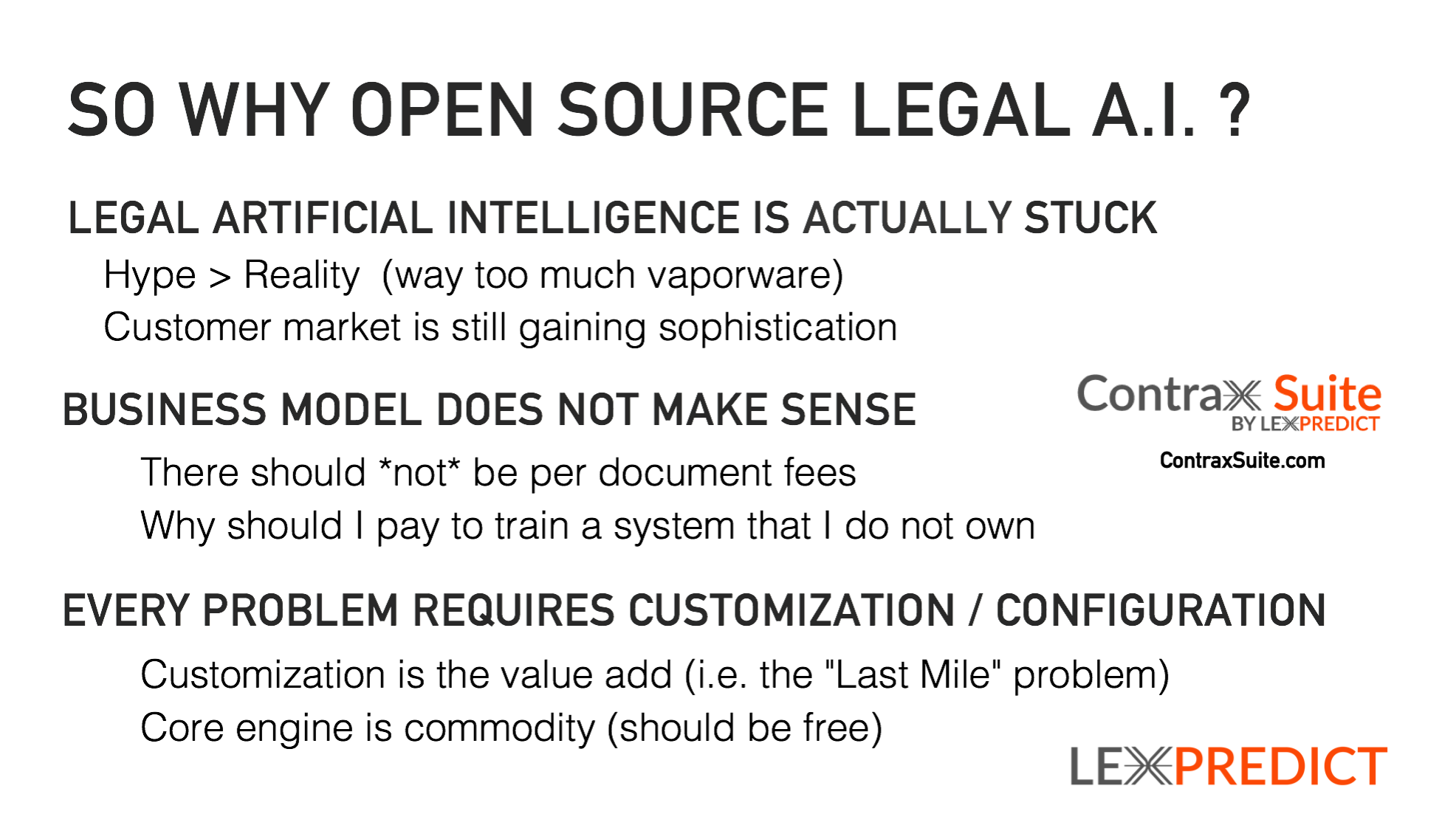

Why Open Source Artificial Intelligence in Legal Tech?

On August 1, we released ContraxSuite (an open source document analytics platform). It is important to note that we have decided upon dual licensing: (1) open source (AGPL), which is pretty hardcore copyleft, and (2) a more permissive license in specific circumstances. The key for us is to maintain the open source ecosystem, which requires balancing competing interests. We cannot grant the more permissive license to everyone under all conditions, or it would undermine the entire effort.

That said, we have real problems in the A.I. + Law community. Some of the claims are outlandish and the business model (at its core) does not really make sense. We think that open source helps solve some (perhaps not all) of the adoption issues.