Tag: computational social science

Introduction to Computing for Complex Systems — ICPSR 2010 — My Full Course Slides Available Online!

I am going to bump this post to the front of the blog one last time as there has been some interest in this material. It has now been several weeks since we completed the full four-week class here at the ICPSR Program in Quantitative Methods. In this course, I (together with my colleagues) highlight the methods of complex systems as well as several environments designed to explore the field. These include Netlogo (agent based models and network models), Vensim (system dynamics / ecological modeling) and Pajek (empirical network analysis). In the final week, we cover a variety of advanced topics:

- (a) Community Detection in Networks

- (b) Computational Linguistics / Natural Language Processing

- (c) Diffusion Models and Mathematical Modeling with Data

- (d) Exponential Random Graph (p*) Models

- (e) Information Retrieval / Webscraping

Although we do not work with more advanced languages within the course, those who need to conduct complex analysis are directed to alternatives such as R, Python, Java, etc.

Anyway, the slides are designed to be fully self-contained and thus allow for individually paced study of the relevant material. If you work through the slides carefully you should be able to learn the software as well as many of the core principles associated with the science of complex systems. The material should be available online indefinitely. If you have questions, feel free to email me.

High Throughput Humanities – ECCS Satellite Conference (Lisboa 2010)

I am currently en route to Portugal for the very exciting High Throughput Humanities meeting on Wednesday. As we believe it fits well within the goals of the meeting, I will briefly present our work on the United States Code (including a brief preview of our still unreleased new paper). Anyway, for those not familiar with the meeting, if you click on the image above you will be taken to the main page. Also, here is the announcement for the meeting:

“The High Throughput Humanities satellite event at ECCS’10 establishes a forum for high throughput approaches in the humanities and social sciences, within the framework of complex systems science. The symposium aims to go beyond massive data acquisition and to present results beyond what can be manually achieved by a single person or a small group. Bringing together scientists, researchers, and practitioners from relevant fields, the event will stimulate and facilitate discussion, spark collaboration, as well as connect approaches, methods, and ideas.

The main goal of the event is to present novel results based on analyses of Big Data (see NATURE special issue 2009), focusing on emergent complex properties and dynamics, which allow for new insights, applications, and services.

With the advent of the 21st century, increasing amounts of data from the domain of qualitative humanities and social science research have become available for quantitative analysis. Private enterprises (Google Books and Earth, Youtube, Flickr, Twitter, Freebase, IMDb, among others) as well as public and non-profit institutions (Europeana, Wikipedia, DBPedia, Project Gutenberg, WordNet, Perseus, etc) are in the process of collecting, digitizing, and structuring vast amounts of information, and creating technologies, applications, and services (Linked Open Data, Open Calais, Amazon’s Mechanical Turk, ReCaptcha, ManyEyes, etc), which are transforming the way we do research.

Utilizing a complex systems approach to harness these data, the contributors of this event aim to make headway into the territory of traditional humanities and social sciences, understanding history, arts, literature, and society on a global-, meso- and granular level, using computational methods to go beyond the limitations of the traditional researcher.”

Introduction to Computing for Complex Systems — ICPSR 2010 Slides for Self-Teaching Available Online!

This summer, I am teaching the Introduction to Computing for Complex Systems course at the ICPSR 2010 Program in Quantitative Methods. On the first day of class, I previously blogged about the course and thus if you are interested in additional details — feel free to consult the prior post.

For those interested in an introductory course that does not assume significant prior preparation, I did want to put up another post highlighting that all of course material (to date) is now freely available online. This includes course slides, model examples and assignments.

In this introductory course, I highlight several environments designed to explore the field of complex systems. These include Netlogo (agent based models and network models), Vensim (system dynamics / ecological modeling) and Pajek (empirical network analysis). Although we do not work with more advanced languages within the course, those who need to conduct complex analysis are directed to alternatives such as R, Python, and Java.

In the course slides, I offer a history of the science of complex systems and walk step-by-step through the process of model development and implementation. The slides and associated materials are designed to be a complete self-teaching environment where an individual who follows along carefully should be able to bring him or herself up to speed. More material will be added over the balance of the four-week session. So, please check back for more … including slides from our guest speakers!

“Agents of Change” — Agent Based Models and Methods [ Via The Economist ]

This week’s “economic focus” in the Economist highlights Agent Based Modeling as an alternative to traditional economic models and methods. As I am currently teaching Agent Based approaches to modeling as part of the ICPSR Introduction to Computing for Complex Systems, I am quite pleased to see this coverage. Indeed, the timing could not be better and I plan to highlight this article in the course!

Here are some highlights from the article: “… Agent-based modelling does not assume that the economy can achieve a settled equilibrium. No order or design is imposed on the economy from the top down. Unlike many models, ABMs are not populated with “representative agents”: identical traders, firms or households whose individual behaviour mirrors the economy as a whole. Rather, an ABM uses a bottom-up approach which assigns particular behavioural rules to each agent. For example, some may believe that prices reflect fundamentals whereas others may rely on empirical observations of past price trends. Crucially, agents’ behaviour may be determined (and altered) by direct interactions between them, whereas in conventional models interaction happens only indirectly through pricing. This feature of ABMs enables, for example, the copycat behaviour that leads to “herding” among investors. The agents may learn from experience or switch their strategies according to majority opinion. They can aggregate into institutional structures such as banks and firms …” For those who are interested, I have made similar points in the post “Complex Models for Dynamic Time Evolving Landscapes –or– Herb Gintis Offers a Strong Rebuke of ‘Meltdown’.”
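
The direct-interaction mechanism in the quoted passage can be illustrated with a very small simulation. What follows is only a minimal sketch, not a model from the article or from the course: the two strategy labels, the function name `simulate_herding`, and the parameters `copy_prob` and `switch_prob` are all assumptions chosen for illustration. Each step, one agent either changes its mind on its own or meets another agent and possibly copies that agent's strategy.

```python
import random


def simulate_herding(n_agents=50, steps=2000, copy_prob=0.3,
                     switch_prob=0.01, seed=0):
    """Return the number of trend-following agents after each step.

    All names and parameter values here are hypothetical; the rule is a
    simple copy-your-neighbour recruitment process, not a calibrated model.
    """
    rng = random.Random(seed)
    # True = trend follower, False = fundamentalist (illustrative labels)
    follows_trend = [rng.random() < 0.5 for _ in range(n_agents)]
    history = []
    for _ in range(steps):
        i = rng.randrange(n_agents)
        if rng.random() < switch_prob:
            # Idiosyncratic switch, independent of everyone else
            follows_trend[i] = not follows_trend[i]
        else:
            # Direct interaction: meet a random agent and maybe copy it
            j = rng.randrange(n_agents)
            if j != i and rng.random() < copy_prob:
                follows_trend[i] = follows_trend[j]
        history.append(sum(follows_trend))
    return history
```

Plotting the returned series for a few seeds is one way to see whether the population drifts toward one strategy for long stretches, which is the "herding" pattern the article describes.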

Complex Models for Dynamic Time Evolving Landscapes –or– Herb Gintis Offers a Strong Rebuke of “Meltdown” by Thomas Woods

As highlighted on Marginal Revolution, economist Herb Gintis has authored an Amazon.com review of the book “Meltdown: A Free-Market Look at Why the Stock Market Collapsed, the Economy Tanked, and Government Bailouts Will Make Things Worse” by Thomas E. Woods Jr. Suffice to say, the review is not flattering. Those interested in the direct attack on the book can read the full review here. Our particular interest in his review lies in the last third of the text where Professor Gintis highlights the genuine weaknesses of current macroeconomic theory. Below is the relevant text:

“I am often asked why macroeconomic theory is in such an awful state. The answer is simple. The basic model of the market economy was laid out by Leon Walras in the 1870’s, and its equilibrium properties were well established by the mid-1960’s. However, no one has succeeded in establishing its dynamical properties out of equilibrium. But macroeconomic theory is about dynamics, not equilibrium, and hence macroeconomics has managed to subsist only by ignoring general equilibrium in favor of toy models with a few actors and a couple of goods. Macroeconomics exists today because we desperately need macro models for policy purposes, so we invent toy models with zero predictive value that allow us to tell reasonable policy stories, the cogency of which are based on historical experience, not theory.

I think it likely that macroeconomics will not become scientifically presentable until we realize that a market economy is a complex dynamic nonlinear system, and we start to use the techniques of complexity analysis to model it. I present my arguments in Herbert Gintis, “The Dynamics of General Equilibrium”, Economic Journal 117 (2007):1289-1309.”

While we do not necessarily agree with every point made in his review, the general thrust of the above argument is directly in line with the thinking of many here at the Center for the Study of Complex Systems. Indeed, the rebuke offered above could be extended and applied to other work in Economics and Political Science. A significant part of the problem is that the analytical apparatus in question is simply not up to the complexity of the relevant problems. Most of the current approaches derive from an era when a CPU had a transistor count of less than 10k and memory was exceedingly expensive. It is not as though leading scholars of the day were completely unaware that most systems are far more intricate than a “few actors and a few goods.” However, tractability concerns created a strong incentive to develop models which could be solved analytically.

Moderately high-end machines now have transistor counts of greater than 2,000,000,000 and memory is incredibly cheap (see generally Moore’s Law). No need to impose fixed point equilibrium assumptions when there is no qualitative justification for eliminating the possibility that limit cycle attractors, strange attractors or some class of dynamics are, in fact, the genuine dynamics of the system. We have previously highlighted the press release “What Computer Science Can Teach Economics” (and other social sciences). This is really important work. However, it is really only the beginning.
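
To make the distinction between a fixed point, a cycle, and chaotic dynamics concrete, here is a minimal sketch using the logistic map, a standard textbook example. It is a stand-in chosen for illustration, not an economic model, and the function names and parameter values are assumptions. The same one-line update rule settles to a single value, oscillates between two values, or wanders irregularly depending on the parameter `r`.

```python
def logistic_orbit(r, x0=0.2, burn_in=1000, keep=64):
    """Iterate x -> r * x * (1 - x) and return the long-run values.

    The first burn_in iterations are discarded so that only the
    behaviour on (or near) the attractor is reported.
    """
    x = x0
    for _ in range(burn_in):
        x = r * x * (1 - x)
    orbit = []
    for _ in range(keep):
        x = r * x * (1 - x)
        orbit.append(round(x, 6))  # round so converged values compare equal
    return orbit


def distinct_values(r):
    """Count how many different values the long-run orbit visits."""
    return len(set(logistic_orbit(r)))


for r in (2.8, 3.2, 3.9):
    print(r, distinct_values(r))
```

With these settings, `r = 2.8` settles on one value (a fixed point), `r = 3.2` alternates between two values (a periodic orbit, the discrete-time analogue of a limit cycle), and `r = 3.9` visits many distinct values, which is the irregular behaviour usually associated with a strange attractor. An analysis that assumes a fixed point from the outset would only ever describe the first case.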

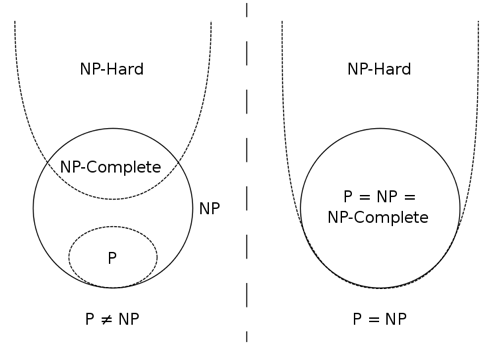

More realistic representations of these complex systems are possible; however, this requires scholars to consider jettisoning analytical approaches/solutions. When modeling complex adaptive systems, far more granularity is possible, but this requires a direct consideration of questions of computation and computational complexity. The use of a computational heuristic is really not that problematic and it can help sidestep truly hard problems (e.g. NP-complete problems and the like). The difficult question is how and under what conditions one should select among the available set of such heuristics.

It is important to note, the dominant paradigm was itself a heuristic representation of agent behavior (and a useful one). While there are still some true believers, a declining number of serious scholars still assert that individuals are actually perfect rational maximizers. At best, this assumption is a useful guidepost for agent behavior and is one which can be subjected to revision by continued work in behavioral economics and neuroeconomics.

For those looking for a genuine intellectual arbitrage opportunity … the path is clear … devote your time to filling the space as this is a space with significant potential returns. The way forward is to remix traditional approaches with leading findings in neuroscience, psychology, institutional analysis and most importantly computer science … winner gets a call from Sweden in about t+25.

OMnI / Shodor Workshop @ Oberlin Computer Science

Along with several other colleagues from Michigan CSCS, Mike, Jon and I are working at the 2010 OMnI / Shodor Workshop. The workshop is being run out of the Computer Science Department at Oberlin College and is designed to introduce various members of the Oberlin faculty to mathematical modeling and computational thinking. We just completed the first day and are looking forward to a great week!

NetSci 2010 — MIT Media Lab

Tomorrow is the first day of presentations at NetSci2010. Our paper will be presented in the AM Network Measures panel. Anyway, for those interested in leveraging network science to study the dynamics of large social and physical systems, the conference promises a fantastic lineup of speakers. Check out the program!

What Computer Science Can Teach Economics …

Above is a link to a news release highlighting Constantinos Daskalakis and his important work. Together with Paul Goldberg and Christos Papadimitriou, Constantinos received the Game Theory and Computer Science Prize for his paper “The Complexity of Computing a Nash Equilibrium.” The prize is awarded once every four years at the World Congress of the Game Theory Society. A shorter and simpler version of the paper is available here.

The question of what computer science can teach social science disciplines such as economics is a constant discussion here at Michigan CSCS. I would say the consensus is that there exist real opportunities for meaningful cross-fertilization. For example, take the results presented in this paper. Now, consider a group of agents/players with ranges of different cognitive limitations and information sets playing all types of different games on multi-dimensional graphs …. now imagine we did not assume the system in question had a fixed point attractor. Rather, assume we allowed for the possibility of a limit cycle attractor or even a strange attractor. Under such conditions, the richness of the problem might encourage one to put aside their pencil and paper and consider what computation might be able to bring to the problem ….

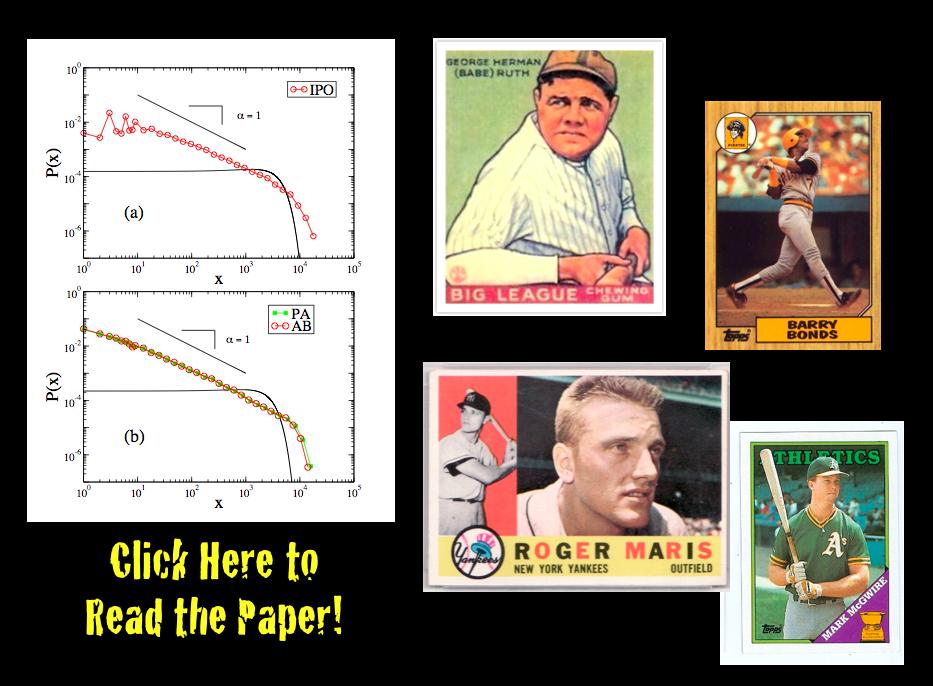

Detrending Career Statistics in Professional Baseball: Accounting for the Steroids Era and Beyond

From the abstract: “There is a long standing debate over how to objectively compare the career achievements of professional athletes from different historical eras. Developing an objective approach will be of particular importance over the next decade as Major League Baseball (MLB) players from the “steroids era” become eligible for Hall of Fame induction. Here we address this issue, as well as the general problem of comparing statistics from distinct eras, by detrending the seasonal statistics of professional baseball players. We detrend player statistics by normalizing achievements to seasonal averages, which accounts for changes in relative player ability resulting from both exogenous and endogenous factors, such as talent dilution from expansion, equipment and training improvements, as well as performance enhancing drugs (PED). In this paper we compare the probability density function (pdf) of detrended career statistics to the pdf of raw career statistics for five statistical categories — hits (H), home runs (HR), runs batted in (RBI), wins (W) and strikeouts (K) — over the 90-year period 1920-2009. We find that the functional form of these pdfs are stationary under detrending. This stationarity implies that the statistical regularity observed in the right-skewed distributions for longevity and success in professional baseball arises from both the wide range of intrinsic talent among athletes and the underlying nature of competition. Using this simple detrending technique, we examine the top 50 all-time careers for H, HR, RBI, W and K. We fit the pdfs for career success by the Gamma distribution in order to calculate objective benchmarks based on extreme statistics which can be used for the identification of extraordinary careers.”
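
The core idea in the abstract, normalizing each achievement to its seasonal average, can be sketched in a few lines. This is only an illustration under simplifying assumptions: it divides each raw season total by that season's mean and rescales by the mean of the seasonal averages, whereas the paper's exact normalization may differ (for instance, by working per opportunity). The function name `detrend` and the sample numbers are made up.

```python
from collections import defaultdict
from statistics import mean


def detrend(records):
    """Return {player: detrended career total}.

    records: iterable of (player, season, value) tuples.
    Each value is divided by its season's average and multiplied by a
    common baseline (the mean of the seasonal averages), so that a
    season with inflated totals counts for proportionally less.
    Assumes every season average is non-zero.
    """
    records = list(records)
    by_season = defaultdict(list)
    for _, season, value in records:
        by_season[season].append(value)
    season_avg = {s: mean(v) for s, v in by_season.items()}
    baseline = mean(season_avg.values())
    career = defaultdict(float)
    for player, season, value in records:
        career[player] += value * baseline / season_avg[season]
    return dict(career)


# Hypothetical home-run totals: the 2000 season has a much higher average
sample = [("A", 1930, 20), ("B", 1930, 10), ("A", 2000, 30), ("C", 2000, 50)]
print(detrend(sample))
```

In this toy data, player C's 50 in the high-scoring season is scaled down and player B's 10 in the low-scoring season is scaled up, which is the kind of cross-era adjustment the abstract describes.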

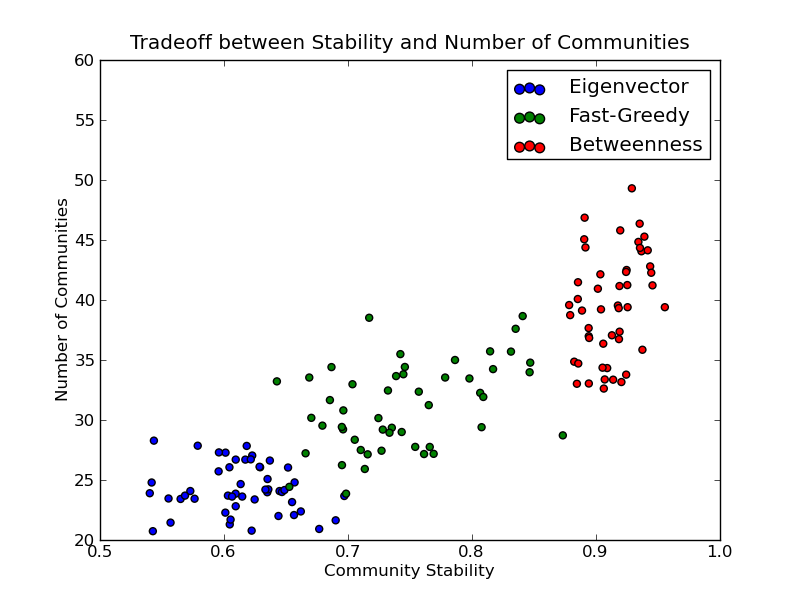

On the Stability of Community Detection Algorithms on Longitudinal Citation Data: Paper & New Code

Last summer, Dan, Jon and I wrote our conference paper for ASNA 2009 entitled On the Stability of Community Detection Algorithms on Longitudinal Citation Data. The purpose of this paper was to experimentally explore a number of properties of community detection algorithms, especially as applied to citation networks (cf. Supreme Court, Tax Court, United States Code).

The model is a fairly simple discrete-time directed growing graph. At time 0, we create a completely disconnected graph with some initial number of vertices. The number of new vertices after time 0 is then modeled by a homogeneous Poisson process. For each of these new vertices, we also model the number of edges per vertex as IID Poisson distributions. The probability distribution over these edges is specified by a modified preferential attachment mechanism.
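
The growth process just described can be sketched as follows. This is not the code from the paper: the attachment rule (weight proportional to `alpha * in_degree + 1`), the parameter names, and the default values are assumptions made for illustration, since the post does not spell out how the preferential attachment mechanism is modified. Only the Python standard library is used, so the Poisson draw is implemented by hand.

```python
import math
import random


def poisson(rng, lam):
    """Draw one Poisson(lam) variate using Knuth's multiplication method."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def grow_graph(steps, n_initial=5, vertex_rate=2.0, edge_rate=3.0,
               alpha=1.0, seed=0):
    """Return (edges, n_vertices) for a growing directed graph.

    Time 0: n_initial isolated vertices (n_initial must be at least 1).
    Each step: a Poisson(vertex_rate) number of new vertices arrive, and
    each sends a Poisson(edge_rate) number of edges to existing vertices.
    Targets are drawn with weight alpha * in_degree + 1 (an assumed rule);
    repeated targets are allowed in this sketch.
    """
    rng = random.Random(seed)
    in_degree = [0] * n_initial
    edges = []
    for _ in range(steps):
        for _ in range(poisson(rng, vertex_rate)):
            new = len(in_degree)
            weights = [alpha * d + 1.0 for d in in_degree]
            n_edges = poisson(rng, edge_rate)
            for target in rng.choices(range(new), weights=weights, k=n_edges):
                in_degree[target] += 1
                edges.append((new, target))
            in_degree.append(0)
    return edges, len(in_degree)


edges, n = grow_graph(steps=20)
print(n, len(edges))
```

Setting `alpha=0` makes every existing vertex equally likely to be cited, while larger values concentrate incoming edges on already well-cited vertices, which gives one simple knob for "varying levels of preferential attachment".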

Most of our questions consider what we’ve called the average pairwise stability. This can be thought of as answering the following question: “what is the probability that Alice and Bob are friends tomorrow if they were friends today?” Here, friendship corresponds to sharing the same community. By asking this question for all pairs of vertices (dyads) for all time steps in which both vertices existed, we get a probability that a community dyad is preserved from step to step. It is important to note that we’re not claiming all algorithms applied to all systems should have high average pairwise stability. In fact, for systems that involve dynamics like random rewiring, the only way to get high pairwise stability is to put all vertices in the same community or their own community at all steps – obviously trivial and unhelpful solutions in practice.
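
One way to read that definition in code is shown below. This is a minimal sketch written for this post's description, not the implementation published with the paper: it pools all "together today" dyads across steps into a single ratio, which is an assumption about how the averaging is done, and the function name and input format are hypothetical.

```python
from itertools import combinations


def average_pairwise_stability(memberships):
    """Estimate P(same community tomorrow | same community today).

    memberships: list with one dict per time step, mapping each vertex
    that exists at that step to its community label.
    Only dyads whose two vertices exist at both consecutive steps count.
    Returns NaN if no dyad ever shares a community.
    """
    together = 0
    preserved = 0
    for today, tomorrow in zip(memberships, memberships[1:]):
        common = [v for v in today if v in tomorrow]
        for u, v in combinations(common, 2):
            if today[u] == today[v]:
                together += 1
                if tomorrow[u] == tomorrow[v]:
                    preserved += 1
    return preserved / together if together else float("nan")


# Hypothetical community assignments over three time steps
steps = [
    {"a": 0, "b": 0, "c": 1},
    {"a": 0, "b": 0, "c": 0, "d": 1},
    {"a": 0, "b": 1, "c": 0, "d": 1},
]
print(average_pairwise_stability(steps))  # 0.5
```

In the toy data, one dyad is together at the first step and stays together, while only one of the three dyads together at the second step survives to the third, giving 2 preserved out of 4. Putting every vertex in a single community at all steps would score 1.0 here, which is the trivial solution noted above.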

Given this model and this conception of stability, we want to perform the following experiments:

- How do the edge-betweenness, fast greedy, and leading eigenvector community detection algorithms compare in terms of their average pairwise stability…

  - for varying levels of preferential attachment?

  - for varying vertex and edge rates?

- Is there a significant tradeoff between the number of communities and the average pairwise stability of these community detection algorithms?

The answers are yes, yes, and yes! You should read the paper for more details.

I’ve also produced some code to help you assess the average pairwise stability for your dataset. The code requires igraph and is only in Python at this point due to an issue with R’s vertex label handling (which I can hopefully work around). You can get the average pairwise stability methods on github and check out the example below:

The Data Deluge [Via The Economist]

The cover story of this week’s Economist is entitled The Data Deluge. This is, of course, a favorite topic of the hosts of this blog. While a number of folks have already highlighted this trend, we are happy to see a mainstream outlet such as the Economist reporting on the era of big data. Indeed, the convergence of rapidly increasing computing power, and decreasing data storage costs, on one side, and large scale data collection and digitization on the other … has already impacted practices in the business, government and scientific communities. There is ample reason to believe that more is on the way.

In our estimation, for the particular class of questions for which data is available, two major implications of the deluge are worth reiterating: (1) no need to make assumptions about the asymptotic performance of a particular sampling frame when population level data is readily available; and (2) what statistical sampling was to the 20th century, data filtering may very well be to the 21st ….

Science Magazine Policy Forum: Accessible Reproducible Research

From the Abstract … “Scientific publications have at least two goals: (i) to announce a result and (ii) to convince readers that the result is correct. Mathematics papers are expected to contain a proof complete enough to allow knowledgeable readers to fill in any details. Papers in experimental science should describe the results and provide a clear enough protocol to allow successful repetition and extension.” (Institutional or Individual Subscription Required).