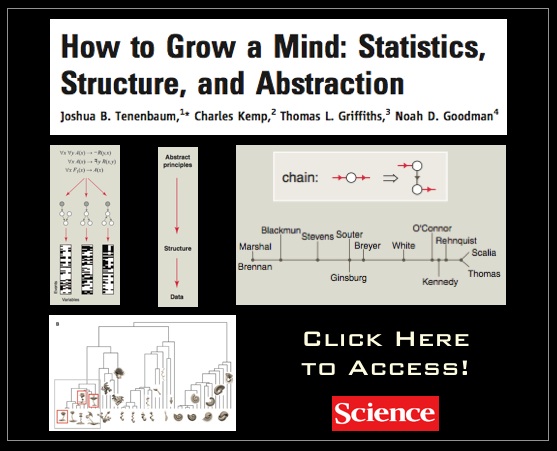

From the abstract: “In coming to understand the world—in learning concepts, acquiring language, and grasping causal relations—our minds make inferences that appear to go far beyond the data available. How do we do it? This review describes recent approaches to reverse-engineering human learning and cognitive development and, in parallel, engineering more humanlike machine learning systems. Computational models that perform probabilistic inference over hierarchies of flexibly structured representations can address some of the deepest questions about the nature and origins of human thought: How does abstract knowledge guide learning and reasoning from sparse data? What forms does our knowledge take, across different domains and tasks? And how is that abstract knowledge itself acquired?”

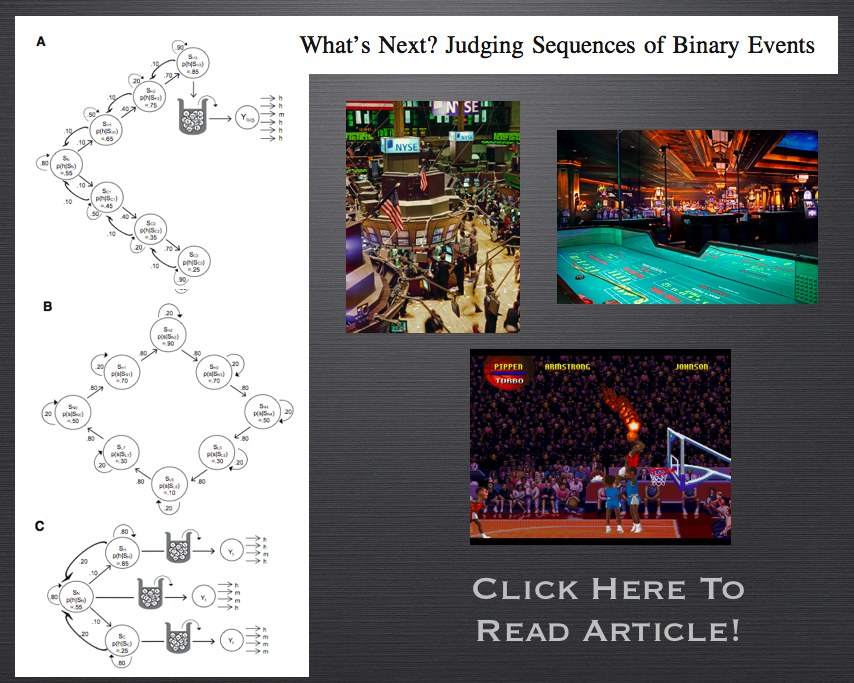

What’s Next? Judging Sequences of Binary Events [HT Paul Kedrosky]

From the abstract: “The authors review research on judgments of random and nonrandom sequences involving binary events with a focus on studies documenting gambler’s fallacy and hot hand beliefs. The domains of judgment include random devices, births, lotteries, sports performances, stock prices, and others. After discussing existing theories of sequence judgments, the authors conclude that in many everyday settings people have naive complex models of the mechanisms they believe generate observed events, and they rely on these models for explanations, predictions, and other inferences about event sequences. The authors next introduce an explanation-based, mental models framework for describing people’s beliefs about binary sequences, based on 4 perceived characteristics of the sequence generator: randomness, intentionality, control, and goal complexity. Furthermore, they propose a Markov process framework as a useful theoretical notation for the description of mental models and for the analysis of actual event sequences.”
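
To make the Markov process idea concrete, here is a minimal sketch of my own, not the authors' model. It assumes the simplest case: a symmetric two-state chain described by a single hypothetical parameter, `p_repeat`, the probability that the next event matches the previous one. Under that assumption, a value of 0.5 is a fair coin, a value below 0.5 corresponds to the alternation that gambler's fallacy beliefs expect, and a value above 0.5 corresponds to the streaks that hot hand beliefs expect. The function names are illustrative only.

```python
import random


def repeat_probability(sequence):
    """Return the fraction of adjacent pairs in which the outcome repeats."""
    pairs = list(zip(sequence, sequence[1:]))
    if not pairs:
        raise ValueError("need at least two events")
    return sum(a == b for a, b in pairs) / len(pairs)


def simulate(p_repeat, n, seed=0):
    """Simulate n binary events from a symmetric first-order Markov chain.

    p_repeat is an assumed, illustrative parameter: the chance that each
    event equals the one before it.
    """
    rng = random.Random(seed)
    sequence = [rng.randint(0, 1)]  # first event is a fair coin flip
    for _ in range(n - 1):
        previous = sequence[-1]
        # Repeat the previous outcome with probability p_repeat, else switch.
        sequence.append(previous if rng.random() < p_repeat else 1 - previous)
    return sequence


if __name__ == "__main__":
    for label, p in [("alternating", 0.3), ("fair coin", 0.5), ("streaky", 0.7)]:
        estimate = repeat_probability(simulate(p, 10_000))
        print(f"{label}: estimated repeat probability = {estimate:.2f}")
```

The same `repeat_probability` function can be applied to an observed sequence, such as a shooter's hits and misses coded as 1 and 0, to check whether it looks streakier or more alternating than a fair coin would be.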