Update: For his thoughts on possible implications in the market for legal services, check out Larry Ribstein’s post “Lawyer’s in Jeopardy” over at Truth on the Market. In a related vein, check out today’s WSJ article “Is Your Job an Endangered Species?” The subtitle reads: “Technology is eating jobs—and not just obvious ones like toll takers and phone operators. Lawyers and doctors are at risk as well.”


The AI Revolution Is On [ Via Wired Magazine ]

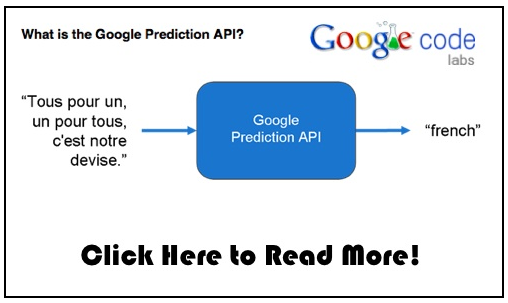

From the Full Article: “AI researchers began to devise a raft of new techniques that were decidedly not modeled on human intelligence. By using probability-based algorithms to derive meaning from huge amounts of data, researchers discovered that they didn’t need to teach a computer how to accomplish a task; they could just show it what people did and let the machine figure out how to emulate that behavior under similar circumstances. … They don’t possess anything like human intelligence and certainly couldn’t pass a Turing test. But they represent a new forefront in the field of artificial intelligence. Today’s AI doesn’t try to re-create the brain. Instead, it uses machine learning, massive data sets, sophisticated sensors, and clever algorithms to master discrete tasks. Examples can be found everywhere …”

Introduction to Computing for Complex Systems — ICPSR 2010 — My Full Course Slides Available Online!

I am going to bump this post to the front of the blog one last time, as there has been some interest in this material. It has now been several weeks since we completed the full four-week class here at the ICPSR Program in Quantitative Methods. In this course, I (together with my colleagues) highlight the methods of complex systems as well as several environments designed to explore the field. These include Netlogo (agent-based models and network models), Vensim (system dynamics / ecological modeling) and Pajek (empirical network analysis). In the final week, we cover a variety of advanced topics:

- (a) Community Detection in Networks

- (b) Computational Linguistics / Natural Language Processing

- (c) Diffusion Models and Mathematical Modeling with Data

- (d) Exponential Random Graph (p*) Models

- (e) Information Retrieval / Webscraping

Although we do not work with more advanced languages within the course, those who need to conduct more complex analyses are directed to alternatives such as R, Python, Java, etc.

Anyway, the slides are designed to be fully self-contained and thus allow for individually paced study of the relevant material. If you work through the slides carefully you should be able to learn the software as well as many of the core principles associated with the science of complex systems. The material should be available online indefinitely. If you have questions, feel free to email me.

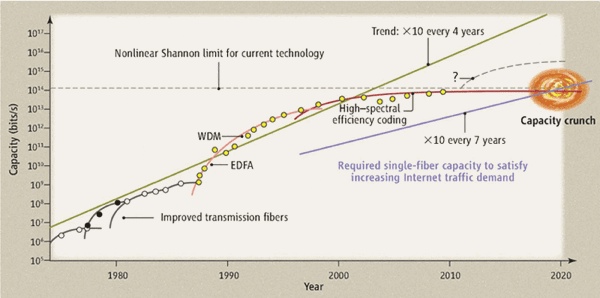

“Peak Data” or “The Capacity Crunch” [From Science]

From this week’s issue of Science comes Filling the Light Pipe by David J. Richardson. This is an important article highlighting a serious challenge facing both the scientific and policy communities.

From the abstract: “It has been a landmark year for the field of optical telecommunications, with the award of the 2009 Nobel Prize to Charles Kao for his insight in the mid-1960s that the future of communications lay in single-mode silica-based optical fibers (1) as well as the 50th anniversary of the first demonstration of the laser—both key technologies responsible for the development of the global-scale communications networks of today (2). Recently, however, a growing realization has emerged within the telecommunications industry that the end of the phenomenal growth in optical fiber communication capacity is within sight. At this year’s Optical Fiber Communication Conference (OFC 2010), several groups reported results within a factor of  2 of the ultimate capacity limits of existing optical fiber technology. Without radical innovation in our physical network infrastructure—that is, improvements in the key physical properties of transmission fibers and the optical amplifiers that we rely on to transmit data over long distances—we face what has been widely referred to as a “capacity crunch” that could severely constrain future Internet growth, as well as having social and political ramifications.”


[HT to Paul Kedrosky]

Recorded Future – A Temporal Analytics Engine

The Recorded Future Temporal Analytics Engine relies upon three steps to serve up information:

1. Scour the web: We continually scan thousands of news publications, blogs, niche sources, trade publications, government web sites, financial databases and more.

2. Extract, rank and organize: We extract information from text including entities, events, and the time that these events occur. We also measure momentum for each item in our index, as well as sentiment.

3. Make it accessible and useful: You can explore the past, present and predicted future of almost anything. Powerful visualization tools allow you to quickly see temporal patterns, or link networks of related information.
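The three steps above can be sketched as a toy pipeline. This is only an illustration, not Recorded Future's implementation: the sample documents, the `KNOWN_ENTITIES` list, the year-only regular expression, and the use of a raw mention count as "momentum" are all assumptions made for this example.

```python
import re
from collections import Counter, defaultdict

# Hypothetical stand-in for step 1 (scouring the web): a few hard-coded snippets.
DOCUMENTS = [
    "Acme Corp plans to open a new plant in 2012.",
    "Analysts expect Acme Corp to report earnings in 2011.",
    "Globex will launch its satellite in 2012.",
]

# Hypothetical entity list; a real system would use a trained entity recognizer.
KNOWN_ENTITIES = ["Acme Corp", "Globex"]

# Matches four-digit years from 1900 to 2099 (a deliberately crude time extractor).
YEAR_PATTERN = re.compile(r"\b(?:19|20)\d{2}\b")


def extract(doc):
    """Step 2: pull out (entity, year) pairs mentioned in one document."""
    entities = [e for e in KNOWN_ENTITIES if e in doc]
    years = [int(y) for y in YEAR_PATTERN.findall(doc)]
    return [(e, y) for e in entities for y in years]


def build_index(documents):
    """Organize extracted pairs into a timeline plus a crude momentum score."""
    timeline = defaultdict(list)  # year -> entities mentioned alongside that year
    momentum = Counter()          # entity -> mention count (assumed proxy)
    for doc in documents:
        for entity, year in extract(doc):
            timeline[year].append(entity)
            momentum[entity] += 1
    return timeline, momentum


timeline, momentum = build_index(DOCUMENTS)

# Step 3: make it accessible, here as a plain chronological listing.
for year in sorted(timeline):
    print(year, timeline[year])
```

Keying the index by time rather than by document is what lets you ask "what is expected to happen in 2012?" instead of "which pages mention Acme Corp?".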

Riders on a Swarm — Might Mimicking the Behavior of Ants, Bees & Birds Be the Key to Artificial Intelligence?

This week’s issue of the Economist has an interesting article entitled Riders on a Swarm. Among other things, the article discusses how attempts to computationally model ant, bee and bird behavior have offered insight into major problems in artificial intelligence.

For those not familiar, the examples discussed within the article are classic models in the science of complex systems. For example, here is the Netlogo implementation of bird flocking. It will run in your browser but requires Java 4.1 or higher. If you decide to take a look, click “setup” and then “go” to run the model. Once inside the Netlogo GUI, you can explore how various parameter configurations impact the model’s outcomes.

One of the major insights of the bird flocking model is how random starting conditions and local behavioral rules can lead to the emergence of observed behavioral patterns that appear (at least at first glance) to be orchestrated by some sort of top-down command structure.

This is, of course, not the case. The model is bottom-up, not top-down. Both the simplicity and the bottom-up flavor of the model are apparent when you explore the model’s code. For those interested, I will take a second and plug the slides from my ICPSR class. In the class, I dedicated about an hour of class time to the bird flocking model. Click here for the slides. In the slides, I walk through some of the important features of the code (discussion starts on slide 16).
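To make the bottom-up point concrete outside of Netlogo, here is a minimal Python sketch. It is not the Netlogo code from the slides: the Netlogo model combines alignment, cohesion and separation rules, whereas this sketch keeps only a single alignment rule (each bird adopts the average heading of the birds it can see). The world size, flock size, vision radius and speed are all assumed values chosen for illustration.

```python
import math
import random

WORLD = 20.0   # side length of the square, wrap-around world (assumed)
N_BIRDS = 60   # number of birds (assumed)
RADIUS = 5.0   # how far each bird can "see" (assumed)
SPEED = 0.5    # distance moved per tick (assumed)


def make_flock(rng):
    """Random starting conditions: random positions and random headings."""
    return [
        {"x": rng.uniform(0, WORLD), "y": rng.uniform(0, WORLD),
         "heading": rng.uniform(-math.pi, math.pi)}
        for _ in range(N_BIRDS)
    ]


def wrapped_delta(a, b):
    """Shortest signed distance from a to b on a wrap-around axis."""
    d = (b - a) % WORLD
    return d - WORLD if d > WORLD / 2 else d


def step(flock):
    """One tick: every bird adopts the mean heading of its visible neighbors."""
    new_headings = []
    for bird in flock:
        sin_sum = cos_sum = 0.0
        for other in flock:  # the bird counts as its own neighbor
            dx = wrapped_delta(bird["x"], other["x"])
            dy = wrapped_delta(bird["y"], other["y"])
            if dx * dx + dy * dy <= RADIUS * RADIUS:
                sin_sum += math.sin(other["heading"])
                cos_sum += math.cos(other["heading"])
        new_headings.append(math.atan2(sin_sum, cos_sum))
    # Update all headings at once, then move every bird forward.
    for bird, heading in zip(flock, new_headings):
        bird["heading"] = heading
        bird["x"] = (bird["x"] + SPEED * math.cos(heading)) % WORLD
        bird["y"] = (bird["y"] + SPEED * math.sin(heading)) % WORLD


def alignment(flock):
    """Order parameter in [0, 1]: 1 means every bird flies the same way."""
    sx = sum(math.cos(b["heading"]) for b in flock)
    sy = sum(math.sin(b["heading"]) for b in flock)
    return math.hypot(sx, sy) / len(flock)


rng = random.Random(42)
flock = make_flock(rng)
before = alignment(flock)
for _ in range(200):
    step(flock)
after = alignment(flock)
print(f"alignment before: {before:.2f}, after: {after:.2f}")
```

Note that no bird ever sees the whole flock and nothing in the code tells the group which way to fly. Any shared direction that shows up in the `alignment` score comes only from the local rule in `step`.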

P ≠ NP ? [ Vinay Deolalikar from HP Labs Publishes His Proof to the Web, $1 Million Clay Institute Prize May Very Well Await ]

UPDATED VERSION HAS BEEN PUBLISHED TO THE WEB (August 9, 2010) [Click Here!]

After sending his paper to several leading researchers in the field and acquiring support, Vinay Deolalikar from HP Labs has recently published P ≠ NP to the web. While it has yet to be externally verified by folks such as the Clay Mathematics Institute, it looks very promising. Indeed, this may very well represent a Millennium Prize for Mr. Deolalikar. For those interested in additional information, check out Greg Baker’s blog (which broke the story). Very exciting!

For initial thoughts on the matter by Dick Lipton, see the latest post on the blog Gödel’s Lost Letter and P=NP.

To read more about the history and importance of P vs. NP, please consult these sources:

- P versus NP Problem (Wikipedia)

- Stephen Cook, The P versus NP Problem

- Madhu Sudan, The P versus NP Problem

- Lance Fortnow, The Status of the P versus NP Problem

- Stephen Cook, The Importance of the P versus NP Question

“Agents of Change” — Agent Based Models and Methods [ Via The Economist ]

This week’s “economic focus” in the Economist highlights Agent Based Modeling as an alternative to traditional economic models and methods. As I am currently teaching Agent Based approaches to modeling as part of the ICPSR Introduction to Computing for Complex Systems, I am quite pleased to see this coverage. Indeed, the timing could not be better and I plan to highlight this article in the course!

Here are some highlights from the article: “… Agent-based modelling does not assume that the economy can achieve a settled equilibrium. No order or design is imposed on the economy from the top down. Unlike many models, ABMs are not populated with “representative agents”: identical traders, firms or households whose individual behaviour mirrors the economy as a whole. Rather, an ABM uses a bottom-up approach which assigns particular behavioural rules to each agent. For example, some may believe that prices reflect fundamentals whereas others may rely on empirical observations of past price trends. Crucially, agents’ behaviour may be determined (and altered) by direct interactions between them, whereas in conventional models interaction happens only indirectly through pricing. This feature of ABMs enables, for example, the copycat behaviour that leads to “herding” among investors. The agents may learn from experience or switch their strategies according to majority opinion. They can aggregate into institutional structures such as banks and firms …”

For those who are interested, I have made similar points in the post “Complex Models for Dynamic Time Evolving Landscapes –or– Herb Gintis Offers a Strong Rebuke of ‘Meltdown.’”
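The behavioural rules described in the Economist excerpt can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a model from the article or from the course: the fundamental value, the linear demand rules, the price-impact constant and the "copy a randomly chosen agent" herding rule are all choices made for this example.

```python
import random

FUNDAMENTAL_VALUE = 100.0  # assumed "true" value that fundamentalists believe in
N_AGENTS = 100             # assumed population size
N_STEPS = 50               # assumed number of trading rounds


def demand(strategy, prices):
    """Behavioural rule for one agent: positive means buy, negative means sell."""
    if strategy == "fundamentalist":
        # Buy when the price is below the fundamental value, sell when above.
        return FUNDAMENTAL_VALUE - prices[-1]
    # Chartist: extrapolate the most recent price change.
    return prices[-1] - prices[-2]


def simulate(seed=0, switch_prob=0.1, impact=0.5):
    """Run the toy market and return the price path and final strategies."""
    rng = random.Random(seed)
    strategies = [rng.choice(["fundamentalist", "chartist"])
                  for _ in range(N_AGENTS)]
    prices = [100.0, 101.0]  # two starting prices so that a trend exists
    for _ in range(N_STEPS):
        # Direct interaction: an agent may copy another agent's strategy.
        for i in range(N_AGENTS):
            if rng.random() < switch_prob:
                strategies[i] = strategies[rng.randrange(N_AGENTS)]
        # The price moves in proportion to average excess demand.
        excess = sum(demand(s, prices) for s in strategies) / N_AGENTS
        prices.append(prices[-1] + impact * excess)
    return prices, strategies


prices, strategies = simulate(seed=1)
share = strategies.count("chartist") / N_AGENTS
print(f"final price: {prices[-1]:.2f}, chartist share: {share:.2f}")
```

There is no representative agent here: each agent carries its own rule, and the copying step lets one strategy spread through the population by direct contact rather than through prices alone.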

Julian Assange: Why the World Needs WikiLeaks [ TED 2010 ]

Love it or hate it … WikiLeaks has been in the news quite a bit lately. In this TED talk, Founder Julian Assange sits down with Chris Anderson to discuss WikiLeaks. From the talk description … “The controversial website WikiLeaks collects and posts highly classified documents and video. Founder Julian Assange, who’s reportedly being sought for questioning by US authorities, talks to TED’s Chris Anderson about how the site operates, what it has accomplished — and what drives him. The interview includes graphic footage of a recent US airstrike in Baghdad.”

Harambeenet 2010 @ Duke Computer Science Department

Today — Mike, Jon and I are at the 2010 Harambeenet Conference here in the Duke Computer Science Department. The conference is centered upon network science and computer science education. It features lots of interdisciplinary scholarship and applications of computer science techniques in novel domains. We are looking forward to an interesting couple of days of discussion!

John Underkoffler Points to the Future of UI [ TED 2010 ]

“When Tom Cruise put on his data glove and started whooshing through video clips of future crimes, how many of us felt the stirrings of geek lust? This iconic scene in Minority Report marked a change in popular thinking about interfaces — showing how sexy it could be to use natural gestures, without keyboard, mouse or command line. John Underkoffler led the team that came up with this interface, called the g-speak Spatial Operating Environment. His company, Oblong Industries, was founded to move g-speak into the real world. Oblong is building apps for aerospace, bioinformatics, video editing and more. But the big vision is ubiquity: g-speak on every laptop, every desktop, every microwave oven, TV, dashboard. ‘It has to be like this,’ he says. ‘We all of us every day feel that. We build starting there. We want to change it all.’ Before founding Oblong, Underkoffler spent 15 years at MIT’s Media Laboratory, working in holography, animation and visualization techniques, and building the I/O Bulb and Luminous Room Systems.”