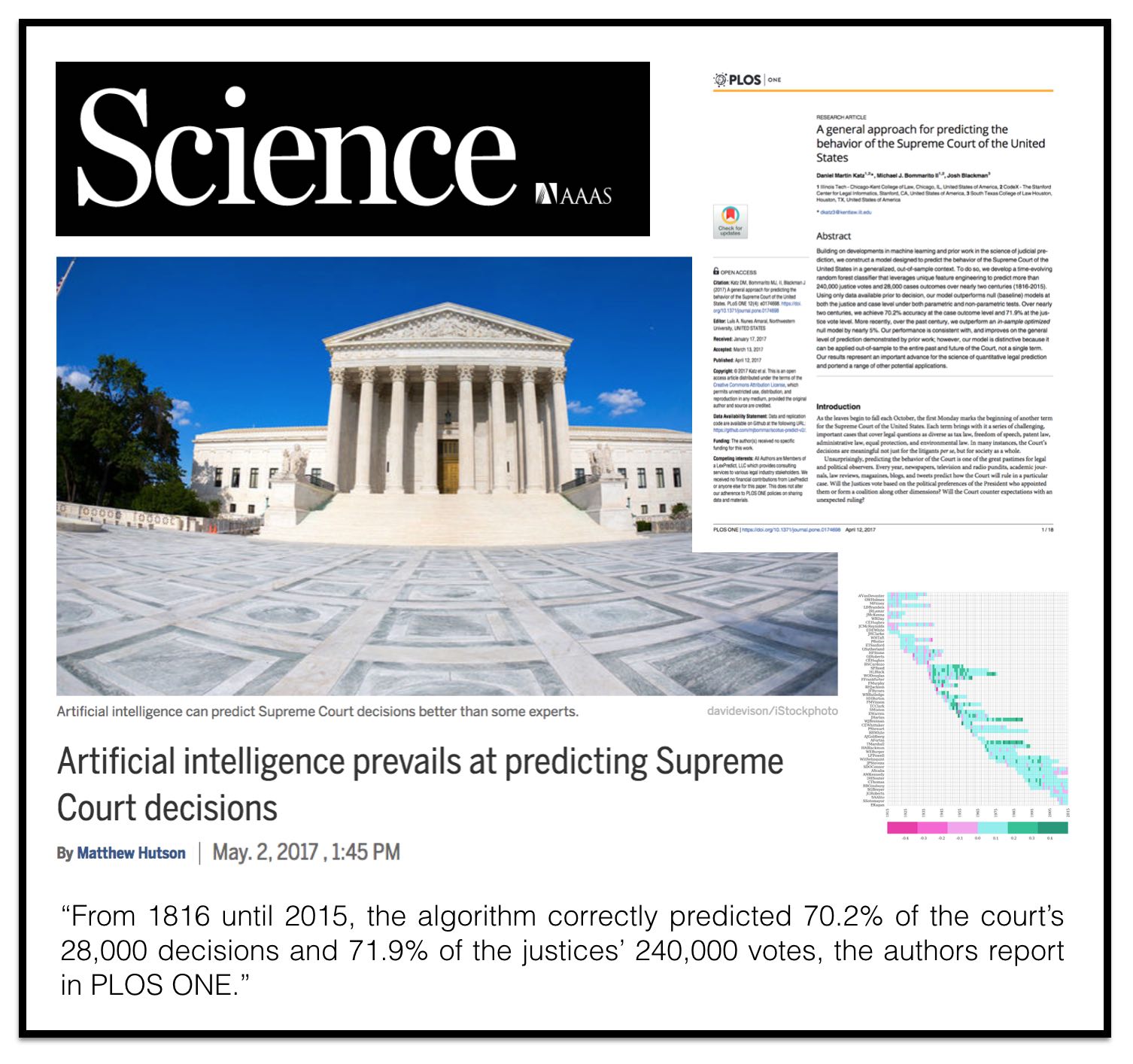

Excited to see coverage of our PLOS One #SCOTUS prediction paper in Science Magazine (via Science News).



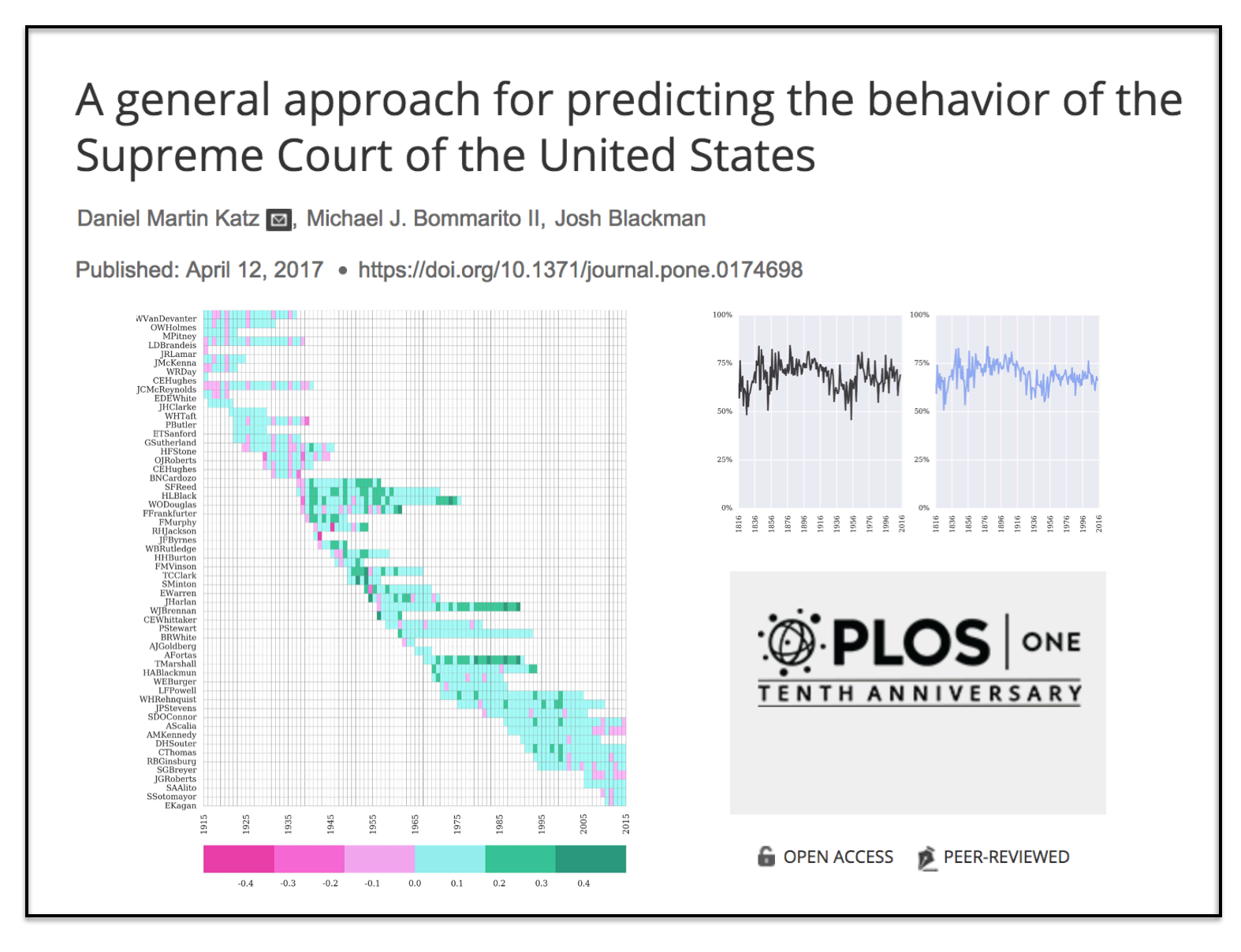

A General Approach for Predicting the Behavior of the Supreme Court of the United States (PLOS One) – Final Version April 2017

Our SCOTUS prediction paper is now live in PLOS One (one of my favorite journals), and I am very happy about it. Thanks to Luís A. Nunes Amaral of Northwestern University for serving as our editor. #OpenSourceScience #SCOTUS #LegalAnalytics #LegalData #QuantitativeLegalPrediction


FutureLaw 2017 at Stanford CodeX

Yesterday was the 5th Annual FutureLaw Conference at Stanford CodeX. As always, it was an exciting day to see the best in cutting-edge technology (including chatbots, predictive analytics and rules-based A.I.). I moderated a morning panel entitled "The Perils and Promise of Predictive Analytics in Law." Overall, it is clear that the community is growing both domestically and abroad.


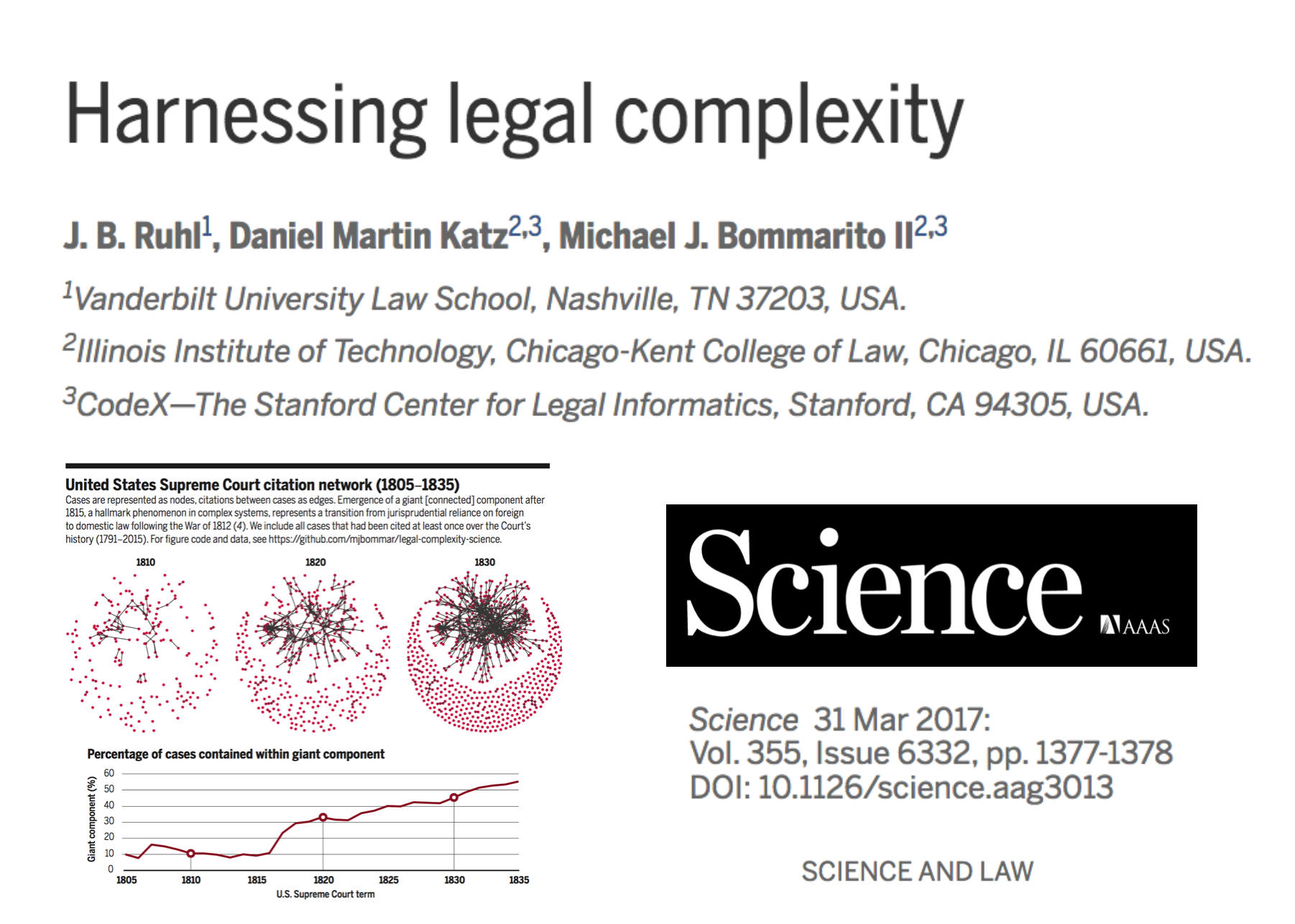

Harnessing Legal Complexity – Bring Tools of Complexity Science to Bear on Improving Law (Ruhl, Katz & Bommarito in Science Magazine)

We have been working in the field of Law + Complex Systems for more than a decade (starting when Mike Bommarito and I were graduate students at the University of Michigan Center for the Study of Complex Systems). Today we took a big step forward with the publication of our article in the March 31 edition of Science Magazine. It was a great pleasure to work with J.B. Ruhl and Michael Bommarito on this paper!

A Clickbaity Title but a More Reasonable Set of Content — The Robot Lawyer Thesis / Artificial Intelligence and Law in the New York Times

A more measured article than what we have seen lately regarding the so-called 'Robot Lawyers Thesis.' I find it pretty funny that the NY Times Facebook link leads to the clickbait title, but the final online version has the more measured title (see image above).


The article certainly has an Enterprise Law / Big Law undertone. If we focus on this subset of the market for legal services, a number of trends are together transforming the market. It is the combined cocktail that is potent …

Here are five of them:

(1) Legal Outsourcing

(2) Insourcing and the Growth of Corporate Legal Departments

(3) Process Improvement (Lean / Six Sigma)

(4) Automation of Legal Tasks using A.I. (a.k.a. robot lawyers)

(5) Financialization of the Law (a.k.a. #Fin(Legal)Tech)

Plenty has been written about legal outsourcing, insourcing and the growth of corporate legal departments, and the application of process improvement methods (Lean / Six Sigma).

With respect to automation, it is curious to see the Times cite the Remus/Levy paper. At best, this paper is relevant only to the automation of a fraction of the work undertaken in Big Law (drawing on data from several years ago). They suggest an 'automation rate' of 2.5% per year. If that rate were to continue, it would imply roughly 25% over a decade in Big Law alone. Again, this does not account for the other market dynamics highlighted above.
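A quick worked check of the decade figure. The simple-sum reading multiplies the annual rate by ten; if instead one assumes (an assumption, not something stated in the Remus/Levy paper as summarized here) that 2.5% of the *remaining* work is automated each year, the rate compounds and the decade total comes out slightly lower:

$$
\underbrace{10 \times 2.5\%}_{\text{simple sum}} = 25\%,
\qquad
\underbrace{1 - (1 - 0.025)^{10}}_{\text{compounded}} \approx 1 - 0.776 = 22.4\%
$$

Under either reading, the order of magnitude is roughly a fifth to a quarter of the relevant Big Law work over ten years.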

It is worth noting that their data come from a period before the rise of #MLaaS (Machine Learning as a Service). Since its inception, #MLaaS has made it far cheaper to custom-build A.I. tools for specific problems. I have said recently that the best in legal tech has yet to be built (see slide 260).

So thanks to the NY Times for shedding light on this field. But let's remember that the #RobotLawyers Thesis is only a small part of the puzzle.

As a matter of strategy, some element of the #LegalInnovation agenda should be part of the strategic portfolio of every legal organization (law firm, law school, corporate legal department, etc.). Why? Because those who pursue it can improve their standing in the relevant market. Only those who use the newest and best tools available will thrive in an ever-changing market.

Artificial Intelligence and Law: A Six-Part Primer – Professor Daniel Martin Katz (Updated Version 03.17.17)

An Updated Version of Artificial Intelligence and Law: A Six-Part Primer

Why Artificial Intelligence Might Replace Your Lawyer (via OZY)

“It’s the alignment of tech and economics that is allowing all this stuff to start moving … The real roll-up of all this isn’t robot lawyers, it’s financialization, with law becoming an applied branch of finance and insurance,” says Daniel Martin Katz, professor at Illinois Tech’s Chicago-Kent College of Law.


Program Chair and Speaker at the Plenary Presidential Summit @ New York State Bar Association Annual Meeting – Artificial Intelligence and its Impact on the Legal Profession

I am pleased to serve as a Program Chair and Speaker at the Plenary Presidential Summit @ New York State Bar Association Annual Meeting. Today’s topic is Artificial Intelligence and its Impact on the Legal Profession. Joining me are the following panelists, grouped by topic:

What is Artificial Intelligence? What is Machine Learning?

Dera J. Nevin, eDiscovery Counsel, Proskauer

What are Some Applications of Artificial Intelligence, Machine Learning, and Predictive Analytics in Law?

Andrew M.J. Arruda, CEO & Co-Founder, Ross Intelligence

Daniel Martin Katz, J.D., Ph.D., Associate Professor of Law, Illinois Tech – Chicago Kent Law

What are the Labor Market Impacts? More Jobs, Fewer Jobs, Different Forms of Legal Jobs and Legal Work?

Noah Waisberg, J.D., Co-founder & CEO, Kira Systems

A General Approach for Predicting the Behavior of the Supreme Court of the United States (Paper Version 2.01) (Katz, Bommarito & Blackman)

It has been a long time coming for us, but here is Version 2.01 of our #SCOTUS paper …

We have tripled the number of years covered by the prediction model and now predict, out of sample, nearly two centuries of historical decisions (1816-2015). We then compare our results to three separate null models (including one that leverages in-sample information).

Here is the abstract: Building on developments in machine learning and prior work in the science of judicial prediction, we construct a model designed to predict the behavior of the Supreme Court of the United States in a generalized, out-of-sample context. Our model leverages the random forest method together with unique feature engineering to predict nearly two centuries of historical decisions (1816-2015). Using only data available prior to decision, our model outperforms null (baseline) models at both the justice and case level under both parametric and non-parametric tests. Over nearly two centuries, we achieve 70.2% accuracy at the case outcome level and 71.9% at the justice vote level. More recently, over the past century, we outperform an in-sample optimized null model by nearly 5%. Our performance is consistent with, and improves on the general level of prediction demonstrated by prior work; however, our model is distinctive because it can be applied out-of-sample to the entire past and future of the Court, not a single term. Our results represent an advance for the science of quantitative legal prediction and portend a range of other potential applications.
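The key methodological constraint in the abstract is "using only data available prior to decision." A minimal sketch of that evaluation idea follows, assuming a hypothetical list of `(term, features, outcome)` records: each term is predicted by a model fit only on strictly earlier terms, and the result is compared against a null model that always predicts the most frequent prior outcome. The toy `lower_court_lookup` model, the `lower_court` feature and the data are all hypothetical stand-ins; this is not the paper's random forest, feature engineering or dataset.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

# Hypothetical record layout: (term, features, outcome), outcome 1 = reverse, 0 = affirm.
Case = Tuple[int, Dict[str, str], int]
Predictor = Callable[[Dict[str, str]], int]


def majority_null(history: List[Case]) -> Predictor:
    """Null model: always predict the most common outcome seen in earlier terms."""
    counts = Counter(outcome for _, _, outcome in history)
    # Default to "reverse" only if there is no history at all.
    guess = counts.most_common(1)[0][0] if counts else 1
    return lambda features: guess


def lower_court_lookup(history: List[Case]) -> Predictor:
    """Hypothetical toy model (not the paper's random forest): majority
    outcome per lower court seen so far, falling back on the null model."""
    by_court: Dict[str, Counter] = {}
    for _, features, outcome in history:
        by_court.setdefault(features["lower_court"], Counter())[outcome] += 1
    fallback = majority_null(history)

    def predict(features: Dict[str, str]) -> int:
        counts = by_court.get(features["lower_court"])
        return counts.most_common(1)[0][0] if counts else fallback(features)

    return predict


def walk_forward_accuracy(cases: List[Case], fit: Callable[[List[Case]], Predictor]) -> float:
    """Score each term with a model fit only on strictly earlier terms."""
    terms = sorted({term for term, _, _ in cases})
    correct = total = 0
    for term in terms[1:]:  # the first term has no prior data to learn from
        model = fit([c for c in cases if c[0] < term])
        for t, features, outcome in cases:
            if t == term:
                correct += int(model(features) == outcome)
                total += 1
    return correct / total if total else 0.0


# Hypothetical toy data, for illustration only.
cases: List[Case] = [
    (2000, {"lower_court": "9th"}, 1),
    (2000, {"lower_court": "5th"}, 0),
    (2000, {"lower_court": "9th"}, 1),
    (2001, {"lower_court": "5th"}, 0),
    (2001, {"lower_court": "9th"}, 1),
    (2002, {"lower_court": "5th"}, 0),
    (2002, {"lower_court": "9th"}, 1),
]

print(walk_forward_accuracy(cases, majority_null))       # 0.5
print(walk_forward_accuracy(cases, lower_court_lookup))  # 1.0
```

The important line is the `c[0] < term` filter: no information from the term being scored leaks into training, which is what separates out-of-sample prediction from an in-sample fit. Swapping in a real classifier would only change the `fit` callable, not the evaluation loop.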

The Future of Law + Legal Technology @ Aviva Stadium – Dublin, Ireland

Enjoyed delivering the Keynote Address at the #FutureOfLaw Conference here at Aviva Stadium in Dublin, Ireland.

Thanks to Leman Solicitors and all of the sponsors for this wonderful event!