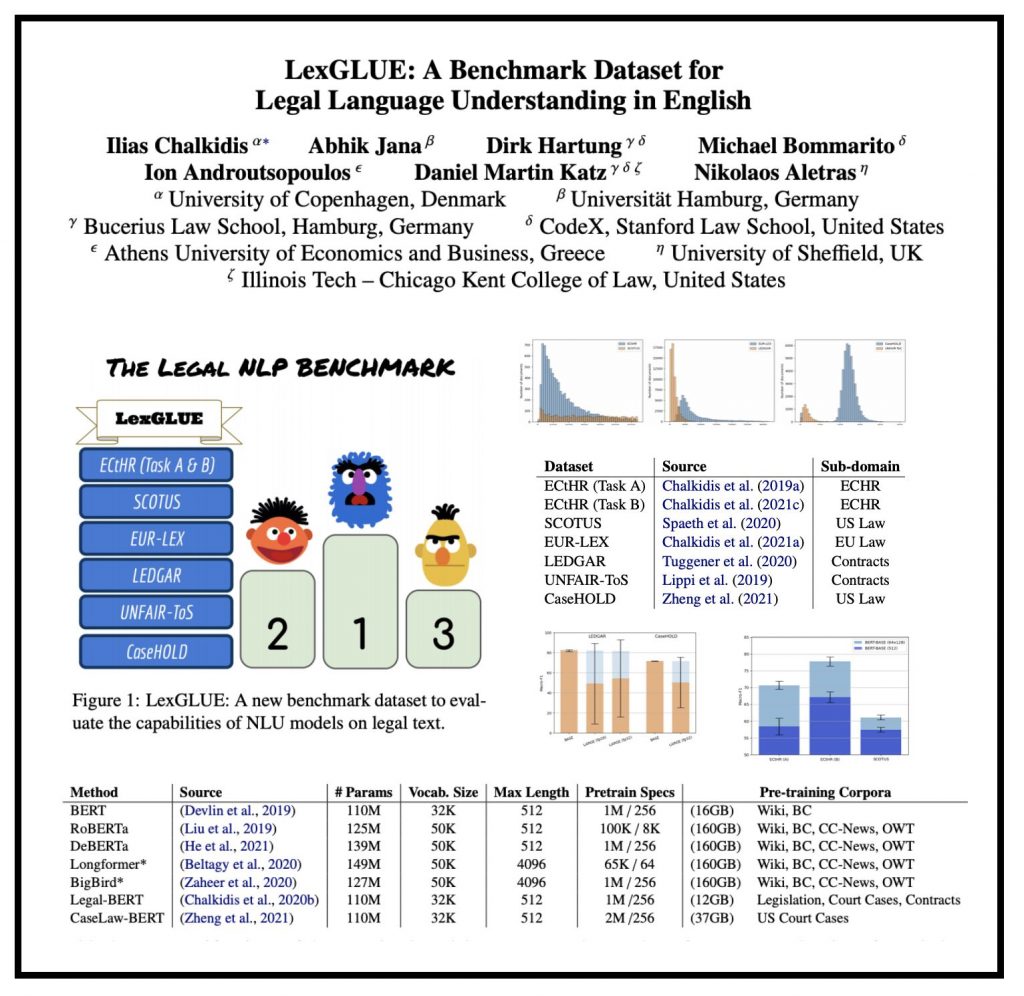

“LexGLUE: A Benchmark Dataset for Legal Language Understanding in English” … Pre-trained Transformers, including BERT (Devlin et al., 2019), GPT-3 (Brown et al., 2020), T5 (Raffel et al., 2020), BART (Lewis et al., 2020), DeBERTa (He et al., 2021) and numerous variants, are currently the state of the art in most natural language processing (NLP) tasks. The question is how to adapt these models to legal text. Inspired by the recent widespread use of the GLUE multi-task benchmark NLP dataset, we introduce LexGLUE, a benchmark dataset to evaluate the performance of NLP methods in legal tasks.

I am proud to have worked with this multi disciplinary research team — who collectively are among the leading NLP + Law Scholars in the world. ( Ilias Chalkidis, Abhik Jana, Dirk Hartung, Michael Bommarito, Ion Androutsopoulos, Daniel Katz, Nikolaos Aletras )

LexGLUE is based on seven English existing legal NLP datasets. However, we anticipate that more datasets, tasks, and languages will be added in later versions of LexGLUE. As more legal NLP datasets become available, we also plan to favor datasets checked thoroughly for validity (scores reflecting real-life performance), annotation quality, statistical power and social bias.